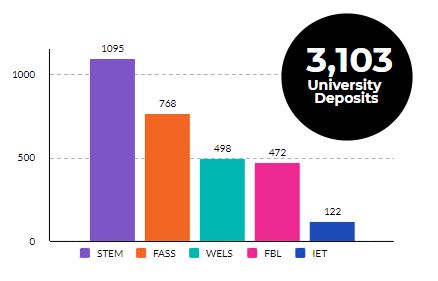

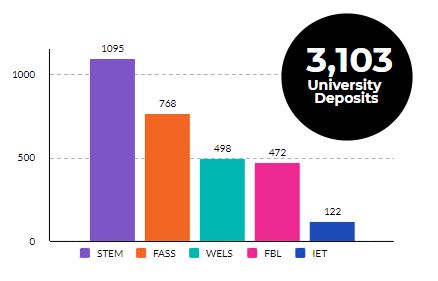

Deposits in 2020-21

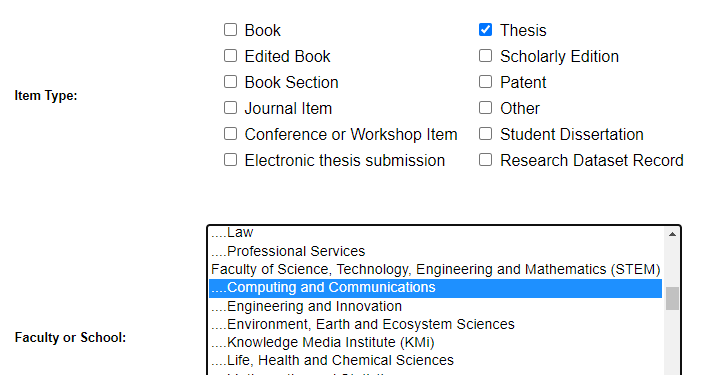

There were 3,103 deposits to ORO in the 2020-21 academic year. Importantly, that’s not the same as the number of research outputs published by OU research staff and students in the academic year, which would be around 1,200. The number of deposits is greater because ORO includes:

- PhD theses

- Student projects

- Publications deposited in 2020-21 but published earlier (or later!)

- Items published by current OU staff who were not affiliated to the OU at point of publication

The deposit rates across faculties reflect both the different sizes of the faculties and the different practices in scholarly communications across them. Simply put, AHSS disciplines have fewer, longer-form, single-authored research outputs, whilst STEM disciplines tend to have more short-form, multi-authored papers.

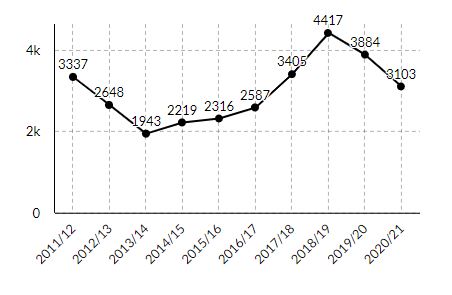

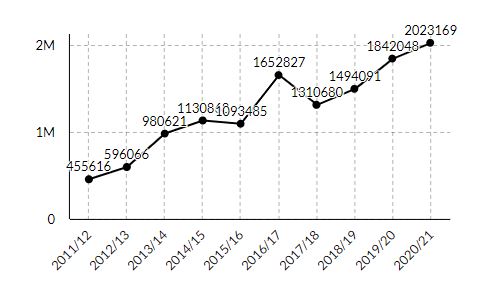

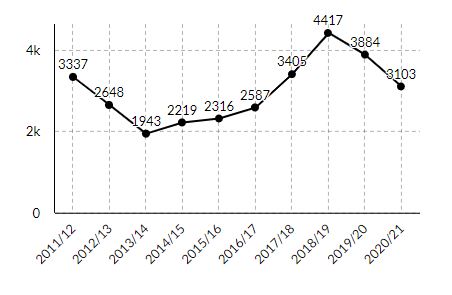

Deposits – 10 year trend data

When you look at the trends, the significant story is the peak in 2019, when the library digitised 1,600 PhD-level theses and added them to ORO.

Consistent deposit of items across the years has been supported by our adoption of mediated deposit via Jisc Router and publisher alerts – we no longer rely solely on authors to add their papers to ORO.

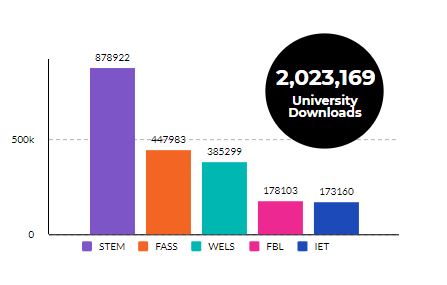

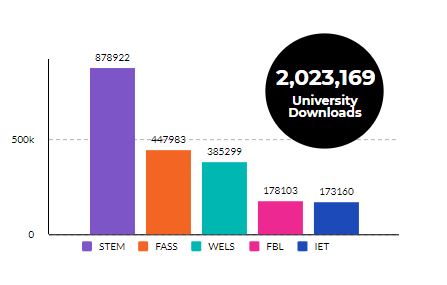

Downloads

ORO continues to receive a significant number of downloads of Open Access content. According to IRStats2 (the native EPrints download counter), ORO received over 2 million downloads of Open Access content last year. But remember that many of these will be downloads from web bots; let’s not confuse a download with a human actually reading a paper! Another count from IRUS, which filters bots more rigorously, gives a more conservative estimate of 880,612 downloads over the same period.

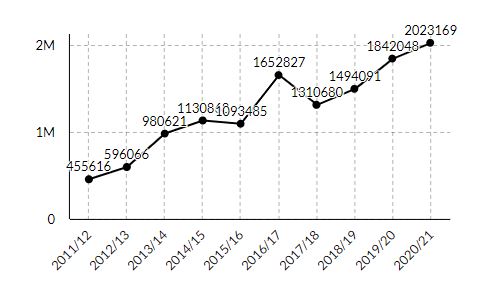

Downloads – 10 year trend data

Not surprisingly, trend data shows an increase in downloads (however you choose to filter them) over time. Inevitably as the repository grows in size, counts of downloads will grow year on year. These are the impressive results of having a repository indexed by Google and Google Scholar.

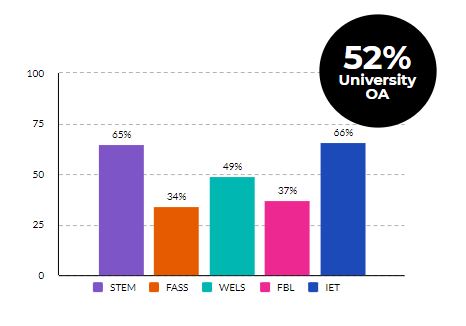

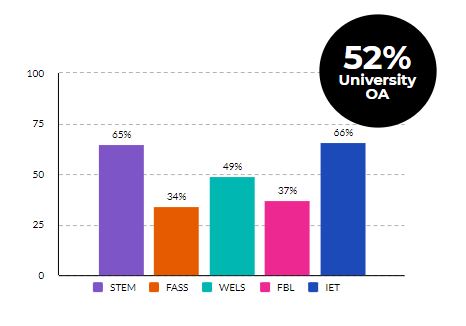

Open Access

ORO strives to be a valuable University asset in providing Open Access to the research outputs of OU research staff and students. Last year 52% of items added to ORO were immediately Open Access. These will be either:

- Gold Open Access – where the published version is freely available from the publisher and added to ORO

- Green Open Access – where a non-final version (often the accepted version) is available in a repository like ORO

When looking at the Faculty breakdown, it’s apparent how Open Access remains contingent on the dominant modes of scholarly communication within academic disciplines. Books and book chapters remain harder to make Open Access than journal articles.

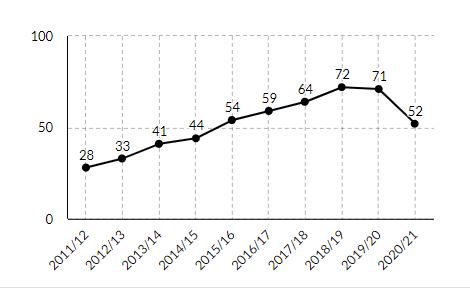

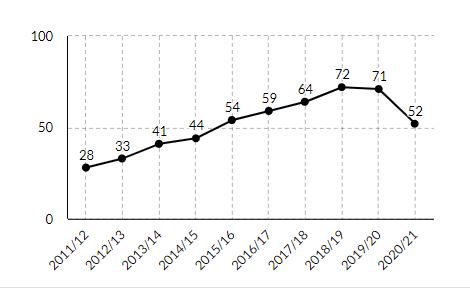

Open Access – 10 year trend data

Nevertheless, ORO trend data show an increase in Open Access over time.

The dip in the last 2 years is due to publisher embargoes on Green Open Access papers added to ORO. Often, commercial publishers will prescribe embargoes of up to 12 months for STEM and upwards of 24 months for AHSS disciplines. This embargoed content is not counted here as Open Access because it’s not freely available; however, once the embargoes end it will count as Open Access (at least for the purposes of these ORO data!).
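To make the embargo effect concrete, here is a minimal sketch of how an item might flip from "embargoed" to "Open Access" in a count like this one. It is not how ORO or EPrints actually computes the figure: the function names `add_months` and `counts_as_open_access` are hypothetical, and it assumes the embargo clock starts on the publication date, which is a common convention but is not stated above.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    The day is clamped to the last day of the target month,
    so 31 January plus one month gives 28 (or 29) February.
    """
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def counts_as_open_access(published: date, embargo_months: int, on: date) -> bool:
    """Hypothetical rule: an item counts as Open Access once its embargo has ended.

    Assumption: the embargo runs from the publication date.
    An embargo of 0 months means the item is immediately Open Access.
    """
    return on >= add_months(published, embargo_months)


# Illustrative dates only, not real ORO records.
stem_paper = date(2021, 3, 15)   # 12-month embargo
ahss_paper = date(2021, 3, 15)   # 24-month embargo
snapshot = date(2022, 6, 1)      # date the statistics are compiled

stem_is_oa = counts_as_open_access(stem_paper, 12, snapshot)
ahss_is_oa = counts_as_open_access(ahss_paper, 24, snapshot)
```

Under these assumptions the STEM paper has cleared its 12-month embargo by the snapshot date while the AHSS paper is still embargoed, which is why the most recent years look lower until the embargoes expire.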

This upward Open Access trend in ORO deposits has been bolstered by the Open Access mandate on OU PhD theses and the digitisation of legacy theses.

University and Faculty Infographics

All these data (and more!) are available in PDF renditions.

University 2020-21 Update

FBL 2020-21 Update

FASS 2020-21 Update

STEM 2020-21 Update

WELS 2020-21 Update

IET 2020-21 Update

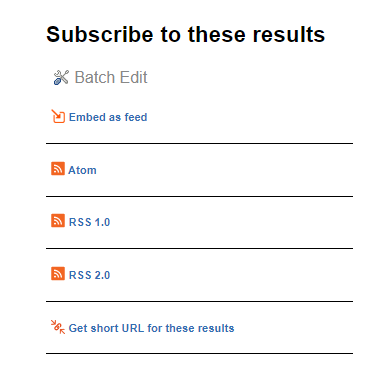

Select “Search”. From the ORO results page go to the Subscribe to these results page. Right click on the RSS 2.0 icon and select “Copy Link Address”.

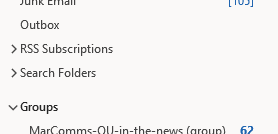

Right Click on “RSS Subscriptions” and select “Add a new RSS feed”. The New RSS Feed dialog box will pop up and you can paste the url you copied from ORO. Click “Add”.

Earlier this week I was delighted to be able to meet via Teams with some of our fantastic Data Champions after a long pause (due to conflicting commitments during the pandemic).
