October 18th, 2012

SoLAR Storm Event – Learning Analytics Symposium

Learning Analytics symposium

KMi Podium, Level 4, Berrill Building, The Open University, Milton Keynes, UK

1.25-4.30pm BST, Thursday 25 October 2012

#StormSLA Twitter archive, question archive, and visualization (thanks Martin Hawksey)

This hybrid face-to-face/webinar event is part of SoLAR Storm, the new virtual research lab convened by the Society for Learning Analytics Research to build research capacity in this new field by networking PhD researchers with each other and the wider community.

1.25pm – Welcome from Simon Buckingham Shum

1.30pm – Visualising Social Learning in the SocialLearn Environment

Bieke Schreurs and Maarten de Laat (Open University, The Netherlands), Chris Teplovs (Problemshift Inc. and University of Windsor), Rebecca Ferguson and Simon Buckingham Shum (Open University UK)

DeLaat-SoLARStormOct2012.pdf / Network Awareness Tool + SocialLearn demo movie

The OUNL team will talk about work in progress from a SocialLearn research internship held by Bieke Schreurs. The Network Awareness Tool (NAT) was developed initially for rendering the normally invisible non-digital networks underpinning informal learning (in particular for teacher professional development). The work reported here describes how NAT was adapted to render social networks between informal learners in the OU’s SocialLearn platform, in which different social ties can be filtered in and out of the network visualization, and moreover, enriched with topics.

Biographies: Bieke Schreurs is an intern on the SocialLearn project, working on the development of social network analytics. Since 2010, she has worked at the Ruud de Moor Centre as a researcher and project manager. She is involved in projects to support primary and secondary schools in their professionalisation process, both in the Netherlands and internationally. Her research focus is on networked learning, and on ways to support and encourage this process.

Maarten de Laat is a full professor at the Open University of The Netherlands, and is director of the social learning research programme. His research concentrates on teacher professional development, knowledge creation through (online) social networks, and the impact that technology and social design have on the way these networks work and learn. He has published and presented his work extensively in research journals, books and conferences.

Chris Teplovs is Chief Research Scientist at Problemshift, Inc. He specialises in the design and implementation of interactive analytics representations of complex data with particular emphasis on network learning, learning analytics, and formative e-assessment. He is currently a Visiting Scholar in the Faculty of Science at the University of Windsor, Canada.

2.15pm – Data Wrangling – Bridging the Gap between Analytics and Users

Gill Kirkup, The Open University, UK

Gill will talk about the ‘data wrangling’ programme that she leads. Data wranglers provide a bridge between those who produce learning analytics and those who make use of those analytics.

Biography: Gill Kirkup is a Senior Lecturer in Educational Technology in the Institute of Educational Technology at The Open University. Her research interests are in gender and lifelong learning (e-learning and distance education), students’ use of learning technologies in their domestic and work environments, and the use by home-based staff of technologies for teaching.

3pm – What Can Learning Analytics Contribute to Disabled Students’ Learning and to Accessibility in e-Learning Systems?

Martyn Cooper, The Open University, UK

MartynCooper-SoLARStormOct2012.pdf

This presentation explores the potential of learning and academic analytics to improve the accessibility of e-learning systems and to enhance support for disabled learners:

- The accessibility focus here is on identifying problems of access to learning systems (VLEs, etc.) for disabled students. (By extension, learning analytics could possibly identify other interaction issues that widely impact on learning for any student.)

- Enhanced support for disabled students could be achieved both by extending the general benefits of learning analytics to disabled students and by focusing dedicated support on them.

Several use cases are outlined in order to make the argument for learning and academic analytics as a set of tools in these areas. Ways in which these approaches could be technically achieved are outlined. Issues are then highlighted that will need to be addressed if these approaches are to be deployed at enterprise level.

There will be a discussion of accessibility metrics that highlights ways in which a learning analytics approach offers some advantages over traditional accessibility evaluations against standards such as W3C’s Web Content Accessibility Guidelines (WCAG 2.0).

Biography: Martyn Cooper is a Senior Research Fellow in the Institute of Educational Technology (IET) at The Open University in the UK. His academic background is in Cybernetics and Systems Engineering.

He has a B.Sc. in Cybernetics & Control Engineering with Mathematics (from Reading University). So far he has managed to bypass all higher degrees. (It took him 12 years to get his Bachelor’s degree!)

His research focuses on:

- applications of new and emerging technologies to enable and empower disabled people

- effective access to a wide range of technologies by disabled people

3.45pm – Good Pedagogical Practice driving learning analytics: OpenMentor, Open Comment and SAFeSEA

Denise Whitelock, The Open University, UK

Whitelock-SoLARStormOct2012.pdf

There is a recognition that e-assessment accompanied by appropriate feedback to the student is beneficial for learning. However, one of the problems with both tutor and electronic feedback to students is that a balanced combination of socio-emotive and cognitive support is required from the teaching staff and the feedback needs to be relevant to the assigned grade. This presentation will discuss two technology-enhanced feedback systems that were based upon models of good pedagogical practice (OpenMentor and Open Comment). It will also introduce my most recent project, Supported Automated Feedback for Short Essay Answers (SAFeSEA), which involves the natural language processing problems of how to ‘understand’ a student essay well enough to provide accurate and individually targeted feedback and how to automatically generate that feedback.

Biography: Denise Whitelock is a Senior Lecturer in Information Technology specialising in building feedback models for e-assessment systems. Her e-assessment research has been funded by a number of external bodies. The most influential in informing policy was the e-Assessment Roadmap, which stressed the need for automated text recognition systems and which assisted the JISC with the planning of their e-assessment strand. Denise directed the Computer Assisted Formative Assessment Project (CAFA) at The Open University, and has investigated the use of feedback with electronic assessment systems with a large number of course teams. She has built on this expertise to construct an electronic feedback system known as eMentor, which won an Open University Teaching Award. This system provides tutors with feedback on the comments they have made on their students’ assignments and coursework. It has now been transformed into OpenMentor and the JISC are funding the transfer of this technology to Southampton University and King’s College London. She has recently been awarded an EPSRC grant to provide an effective automated interactive feedback system that yields an acceptable level of support for university students writing essays in a distance or e-learning context.

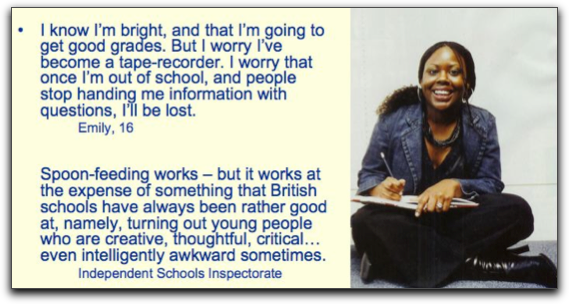

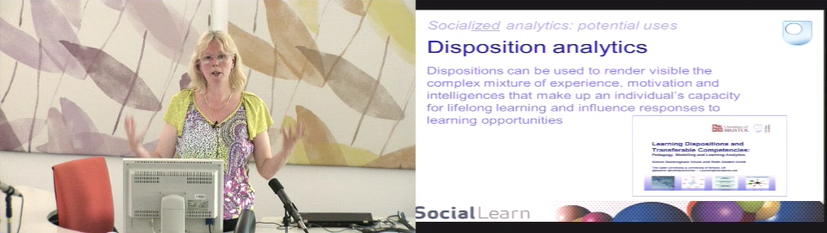

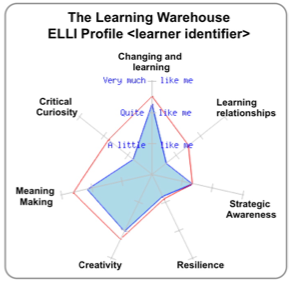

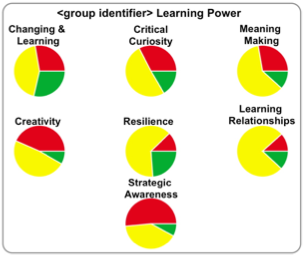

Intrinsic motivation to engage in learning (whether formal/informal, or academic/workplace) is known to be a function of a learner’s dispositions towards learning. When these are fragile, learners often disengage when challenged, and are passive, lacking vital personal qualities such as resilience, curiosity and creativity needed to thrive in today’s complex world. Learning Analytics seek to improve learning by making the optimal use of the growing amounts of data that are becoming available to, and about, learners [1,2]. Dispositional Learning Analytics seek specifically to build stronger learning dispositions (note that these are not ‘learning styles’, which have a dubious conceptual basis [3]). One particularly promising approach models dispositions as a 7-dimensional construct called Learning Power, measured through self-report data [4]. A web application generates real-time personal and cohort analytics, which have been shown to impact learners, educators, and organizational leaders. The underlying platform pools data from >50,000 profiles, which, in combination with other datasets, enables deeper analytics. As a form of Social Learning Analytic [5], in combination with Discourse-Centric Analytics [6-7] and Social Network Analytics for learning [8], our strategic goal is to provide a suite of analytics that can help learners grow in Learning Power, and ultimately, build their capacity as life-long, life-wide learners.

A key next step in this research programme is to harvest and analyse data from the traces that learners leave as they engage in social digital spaces, and to explore its relationship to other data sets and data streams. This PhD will therefore fund a technically strong candidate to design, implement and evaluate “Learning Analytics for Learning Power”. The project will deploy iterative prototypes in authentic use contexts, considering OU platforms as a starting point (e.g. SocialLearn [9]; Cohere [10]; EnquiryBlogger [11]), but open to data streams from new kinds of digitally instrumented interactions (e.g. from Pervasive Computing; Augmented Reality; Quantified Self). A possible outcome is an analytics architecture open to diverse forms of input, grounded in a theoretically robust framework, generating visual analytics with transformative power for reflective learners.

This is a fantastic opportunity if you’re passionate about the future of learning and want to engage with next generation pedagogy. You’re already a great web and database developer, and are now looking to develop a research career in Learning Analytics, one of the fastest growing topics in technology-enhanced learning. Your supervisors will be