“A user focused post talking about the use case (user requirements) for the project work, how the project affects your users and how users are being engaged and reacting to the project”

The user requirements

The evidence from library users at the Open University is that a significant number find access to online library resources to be difficult and unfriendly. More than 25% of respondents to the 2010 Library Survey said that they needed help in using online library resources. The two major areas they found most difficulty with were searching for journal articles and searching databases. Amongst the open comments were a large number that indicated how hard students found search. Comments made included:

“difficult to locate a particular article – the search doesn’t always work”

“The search engine on the library is not very user friendly. I had to find a specific article recommended in the text and it took several attempts to locate it.”

“The search facility is poor and doesn’t find stuff that is supposed to be there”

Taking all the search-related open comments, the top five words used were: search – library – article – find – difficult. These five words could be seen to sum up the user experience of library search for many people.

At the time of the Library Survey the library used a commercial federated search service. This meant that to search for articles users would first have to choose from sixteen categories, then enter their search, and then wait while the system sent the search off to a selection of online resources. They would finally get back a list of results that would trickle in over several minutes, depending on how fast a response was received from the database providers. As well as federated search, the library offers searches of the website and the library catalogue, and provides links to the native search interfaces of hundreds of electronic journals, databases and ebooks. The result is a complex and often changing landscape of library resources.

Difficulties with library search seem to be a common theme across the sector. See this blog post by Jane Burke from ProQuest: http://mhdiaz.wordpress.com/2011/03/11/the-user-expectations-gap-%e2%80%93-a-brick-wall-instead-of-an-open-door/ . Many academic libraries have taken a similar tabbed search approach to providing access to library search. In some ways the objective has been to achieve a simple ‘Google-like’ interface for users.

To start to address the feedback from users, OU Library Services replaced the federated search tool with the EBSCO Discovery Service (EDS) in January 2011. EDS searches an aggregated index of metadata and provides a much more immediate set of relevance-ranked results from a simple ‘Google-like’ interface. To evaluate the impact of this new service the library carried out a series of 1-to-1 interviews, focus groups and surveys throughout February and March 2011 to test whether users found the new search system to be an improvement.

The RISE project arose from the need to focus on ways of improving the user experience of library search. The adoption of EDS and the availability of a search API opened up the potential for more innovative search approaches. One such approach is to use activity data to improve the user experience by providing recommendations. This has been a common feature of many websites for several years. Amazon is possibly the best example.

As the One-Stop focus groups were happening in parallel with the RISE project, some questions on recommendations were included. Students were asked if they use recommendations and ratings and whether they would use recommendations for electronic resources. Feedback from the students at the focus groups varied depending on whether they were undergraduate or postgraduate. The feedback from the first focus group (undergraduates, a mix of level 1 and level 2 modules) was that they saw ratings and reviews from other students as being beneficial. One commented that ‘other people’s experiences are valuable’. They were particularly interested in being able to relate them to the module a student had done and suggested that they would also like to know how high a mark the student had got in their module. This suggests that some students are very focussed on achievement and saw that recommendations and ratings could help them.

The second focus group (postgraduates studying a range of Arts, Social Sciences, Education and Science modules) was more cautious about recommendations. They put a lot of importance on the provenance of the recommendations, and they were interested in module-specific recommendations about which databases were best and which search terms might get the best results.

More in-depth user feedback on recommendations was then gleaned through the RISE project. See User Engagement and User Reaction below.

User impact

The MyRecommendations and Google Gadget tools created by RISE have been made available to users as prototype and experimental tools rather than as core library products. See the Measuring Success Blog post for more details.

User engagement

RISE has sought user engagement through several avenues:

- The MyRecommendations search page and One-Stop Google Gadget have been made available through the Library website at http://library.open.ac.uk/rise/ and via the iGoogle gadgets site http://www.google.co.uk/ig/directory?type=gadgets (search for Open University Library). Users have been encouraged to try the tools through library news items and blog posts, and to feed back their comments via an online survey linked from the MyRecommendations home page.

- At a more formal level the project planned and carried out a set of 1:1 face to face interviews with 11 students. The questions were pre-approved by the Open University’s Student Research Project Panel which also granted permission to contact a selection of students. Out of 1000 students emailed, 50 agreed to take part and 52 agreed to test MyRecommendations remotely. This was a good response and highlighted an interest in the potential benefits of a recommender system.

- The formal evaluation work was supported by the use of website analytics to track user behaviour in the tools. See Measuring Success for details.

User reaction

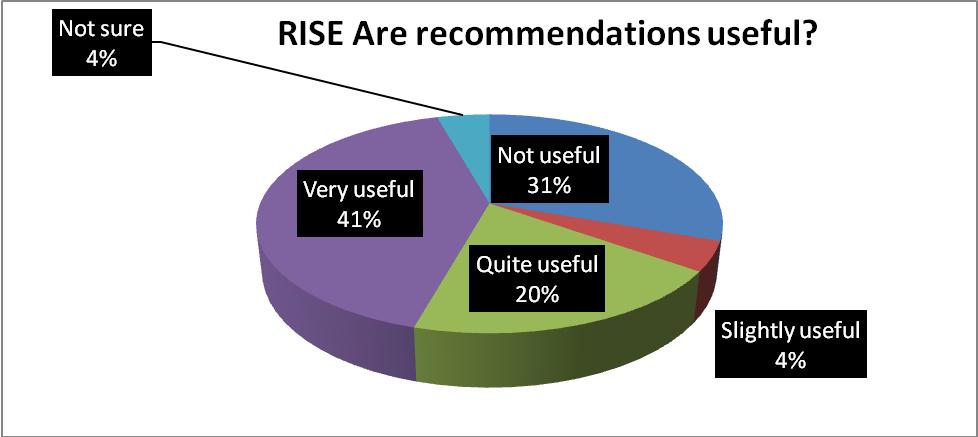

Overall, user reaction was positive towards recommender systems. All of the students interviewed during the 1:1 sessions said that they liked the idea of getting recommendations on library e-resources. 100% said they preferred course-related recommendations, mainly because these would be seen to be the most relevant and lead to a quick find.

“I take a really operational view you know, I’m on here, I want to get the references for this particular piece of work, and those are the people that are most likely to be doing a similar thing that I can use.” – postgraduate education student

There is also an appreciation that recommendations may give a more diverse picture of the literature on a topic:

“I think it is useful because you run out of things to actually search for….You don’t always think to look in some of the other journals… there’s so many that you can’t know them all. So I think that is a good idea. You might just think “oh I’ll try that”. It might just bring something up that you’d not thought of.” – postgraduate psychology student

The “People using similar search terms often viewed” recommendation was seen in a good light by some interviewees who lacked confidence in using library databases:

“Yes, I would definitely use that because my limited knowledge of the library might mean that other people were using slightly different ways of searching and getting different results.” – undergraduate English literature student

If we were to implement a recommender system in the future, the students suggested the following improvements:

- Make the recommendations more obvious, using either a right hand column or a link from the top of the search results.

- Indicate the popularity of the recommendations in some way, such as “X per cent of people on your module recommended A” or “10 people viewed this and 5 found it useful”.

- Indicate the currency of the recommendations. If something was recommended a year ago it may be of less interest than something recommended this week.

- In order for students to trust the recommendations, it would be helpful to be able to select the module they are currently searching for. This would greatly reduce cross-recommendations, which are generated by people studying more than one module.

- Integrate the recommendations into the module areas on the VLE.

- When asking students to rate a recommendation, make it explicit that this rating will affect the ranking of the recommendations, e.g. “help us keep the recommendations accurate. Was this useful to you?”
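Several of these suggestions (a popularity indicator, currency, and filtering by the selected module) could be combined in a single ranking step. The following is a minimal sketch in Python and not the RISE implementation: the `Recommendation` fields, the 90-day half-life, the smoothed usefulness ratio and the module codes are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Recommendation:
    """A hypothetical recommendation record (field names are assumptions)."""
    title: str
    module: str              # module of the students whose activity produced it
    views: int               # how many people viewed the resource
    useful_votes: int        # how many of them rated it as useful
    last_recommended: date   # most recent date it was recommended


def score(rec: Recommendation, today: date, half_life_days: int = 90) -> float:
    """Combine popularity, usefulness ratings and currency into one number."""
    age_days = (today - rec.last_recommended).days
    # Currency: the weight halves every `half_life_days` (assumed value).
    recency = 0.5 ** (age_days / half_life_days)
    # Smoothed share of viewers who found the item useful.
    usefulness = (rec.useful_votes + 1) / (rec.views + 2)
    return rec.views * usefulness * recency


def rank_for_module(recs, module, today, half_life_days=90):
    """Keep only the selected module's recommendations, best first."""
    selected = [r for r in recs if r.module == module]
    return sorted(selected,
                  key=lambda r: score(r, today, half_life_days),
                  reverse=True)


def popularity_label(rec: Recommendation) -> str:
    """Build the kind of popularity message the students asked for."""
    return (f"{rec.views} people viewed this and "
            f"{rec.useful_votes} found it useful")
```

Filtering on the selected module before ranking is what removes the cross-recommendations mentioned above, while the decay term lets something recommended this week outrank an equally popular item recommended months ago.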