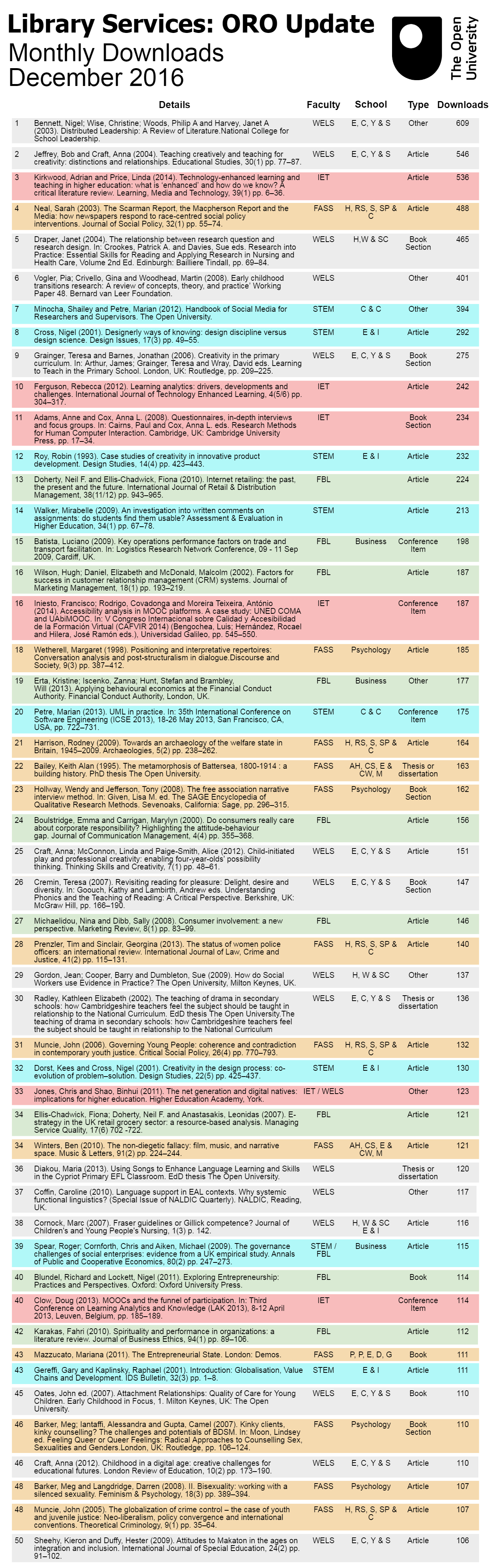

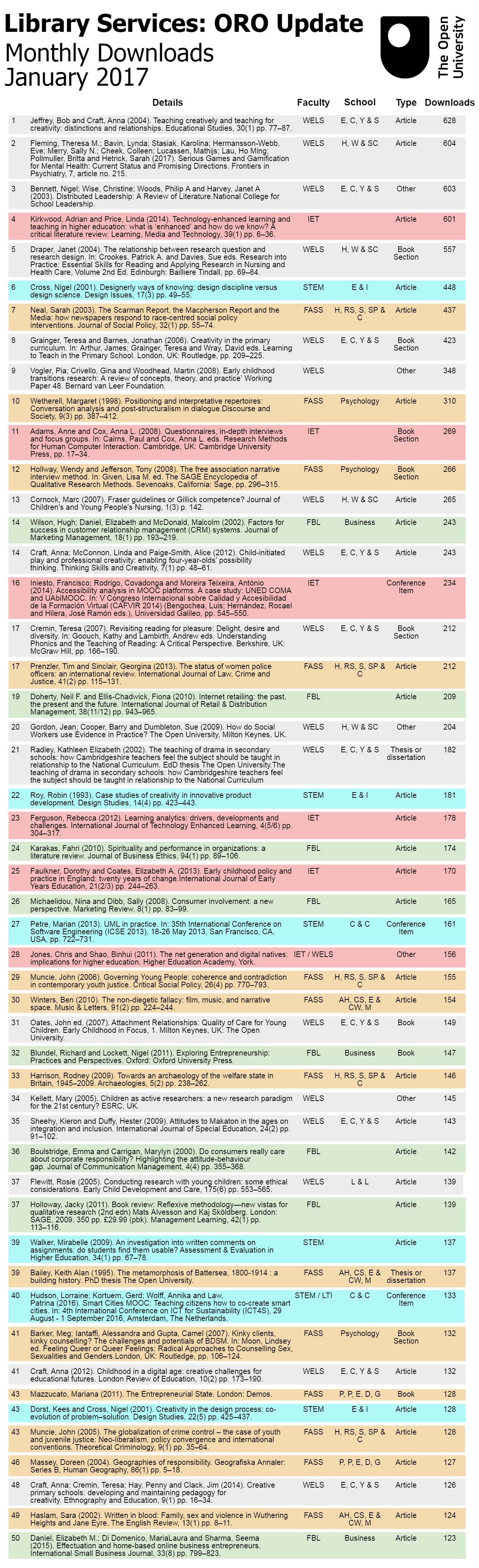

The download data for Open Access content in ORO can tell some fascinating stories, and the counts from December and January are no exception. It really is amazing what you can discover with a bit of digging!

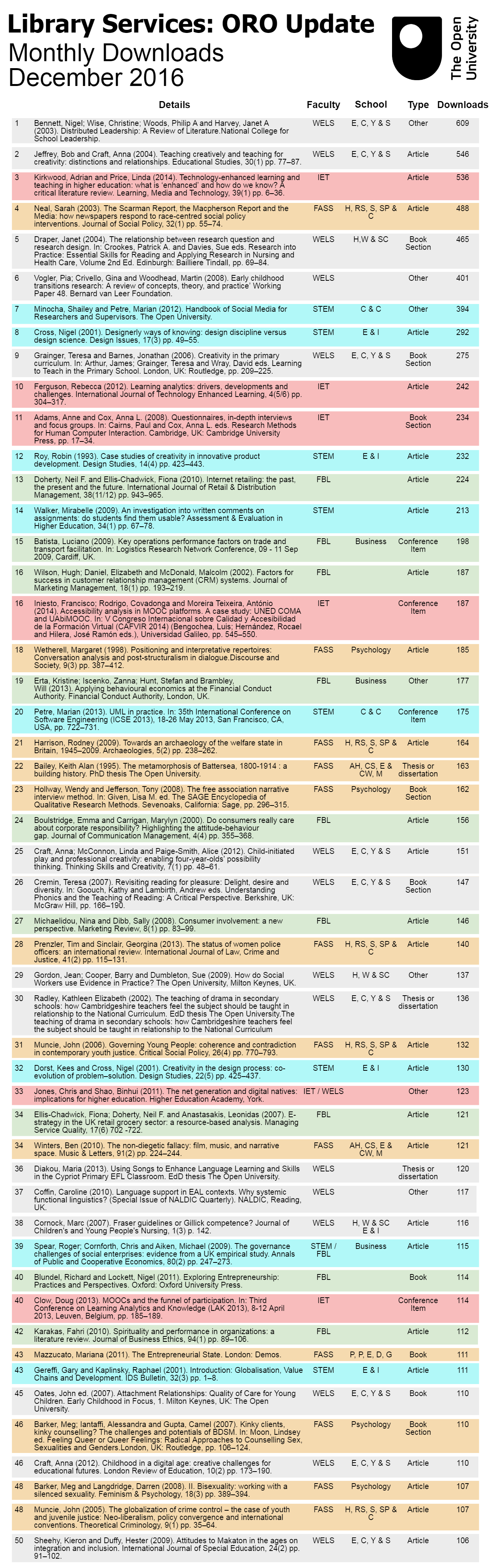

The first story that jumped out at me from the December list is a journal article published back in 2002 by Dr Sara Haslam in FASS:

Haslam, Sara (2002). Written in blood: Family, sex and violence in Wuthering Heights and Jane Eyre. The English Review, 13(1) pp. 8–11.

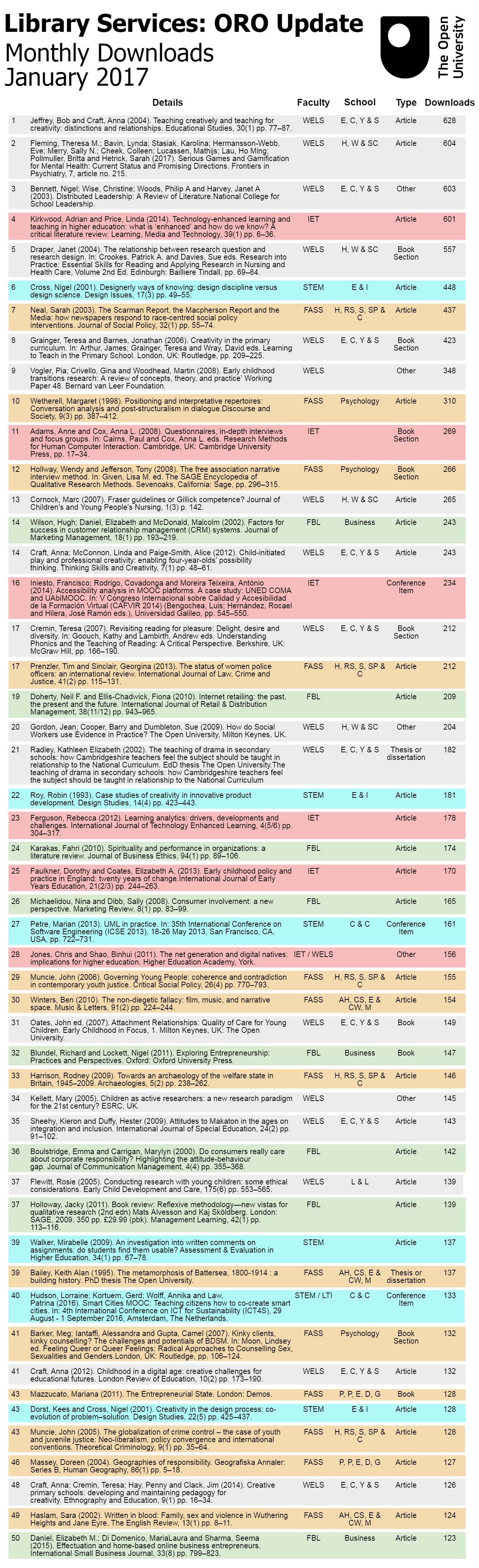

This article is a “steady performer” that averages between 20 and 30 downloads a month. But December and January saw a spike, with 100 downloads in December and 124 in January, which took it into the top 50 list (see below). Looking at the referrals, I noticed a large number coming from open.edu, or OpenLearn to you and me. A quick search found this page, which had a link to the ORO page for the article.

Sara was the academic consultant on the OU/BBC co-production “To Walk Invisible”, and this was one of the OpenLearn pages supporting the programme. It’s great to see ORO and OpenLearn connected like this. How joined up!

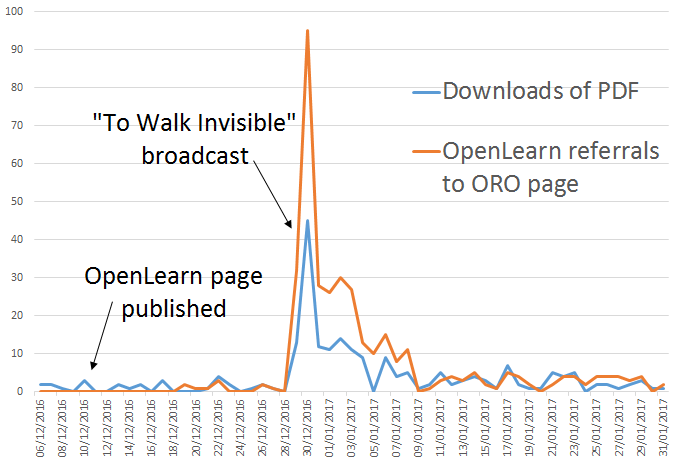

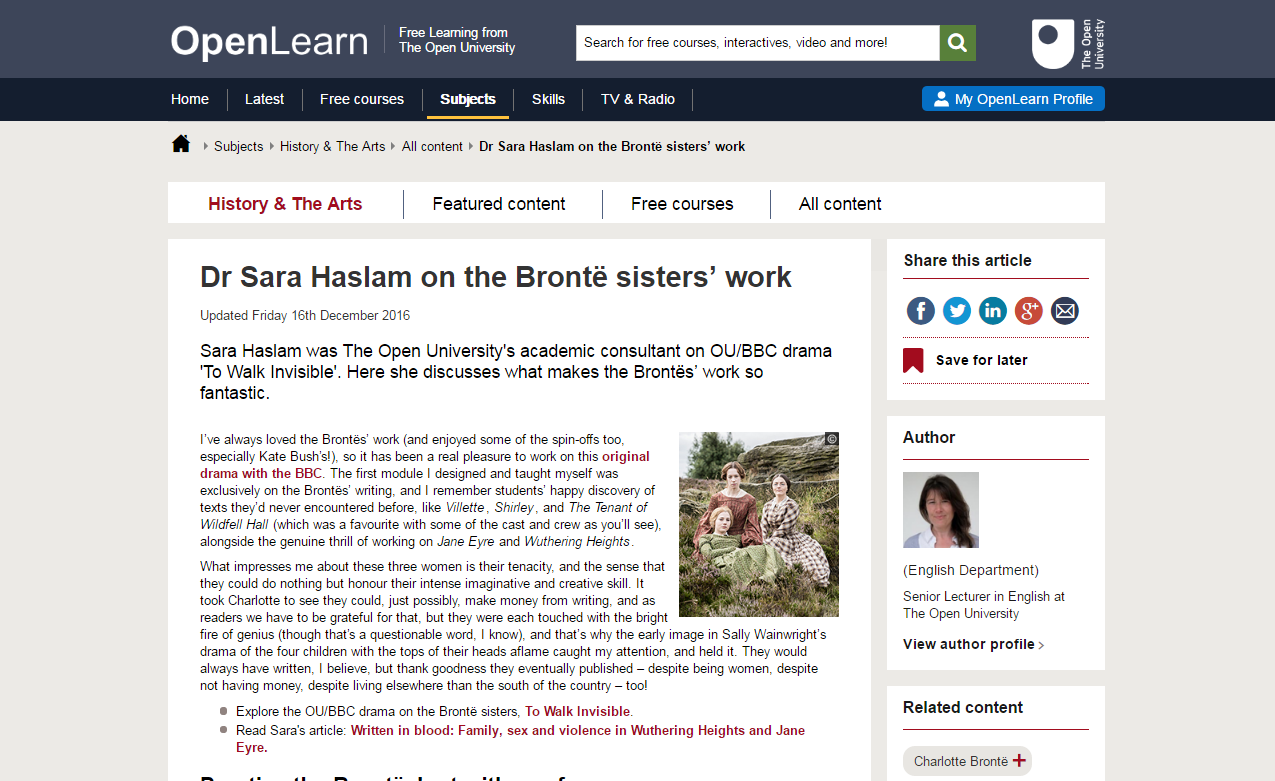

Google Analytics shows that the ORO page was visited 251 times from OpenLearn in the week immediately following the broadcast (29th December to 4th January), and the PDF of the article was downloaded 115 times. So roughly half the visitors coming to ORO from OpenLearn (115 out of 251, about 46%) were interested enough to download the paper!

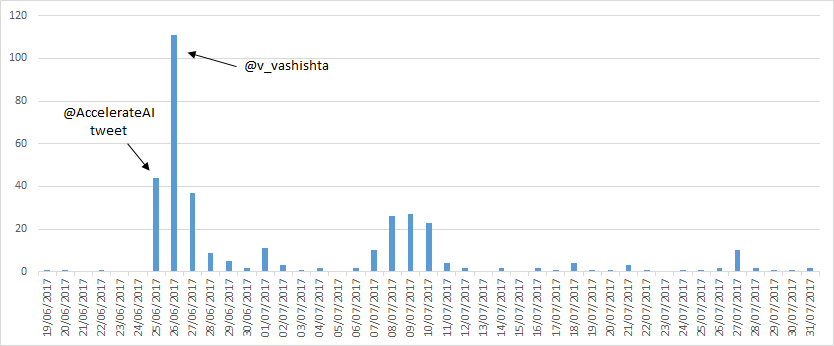

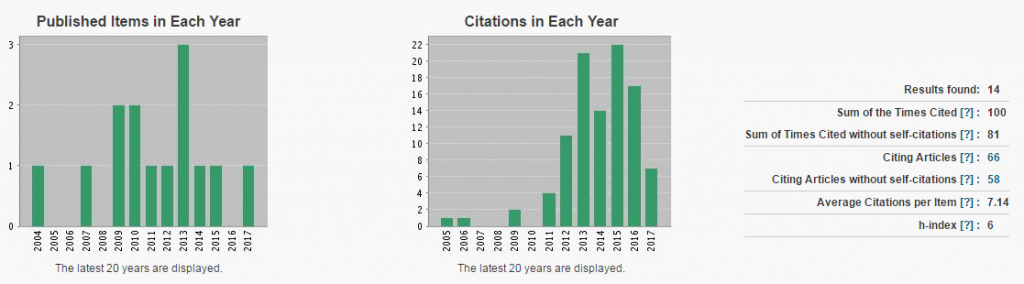

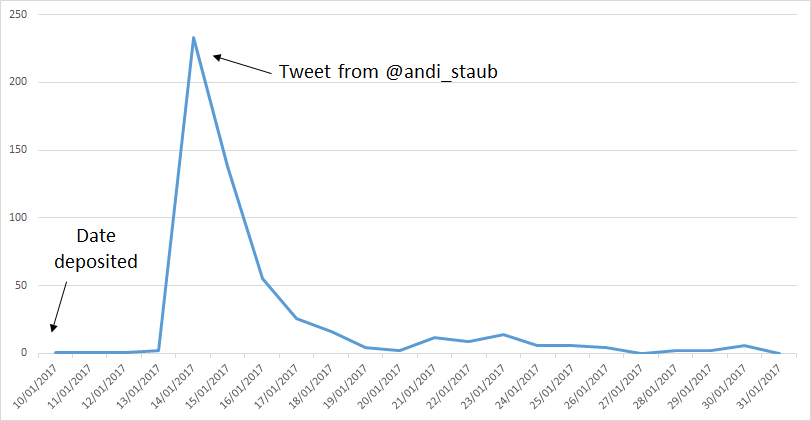

Plotting the site visits and downloads of the paper gives us this graph.

The graph shows that the biggest spike came immediately after the programme was broadcast. But there is also a tail of site visits and downloads that coincides with the programme’s availability on iPlayer. It’s a great example of connecting Open Learning and Open Research.

The second story comes from the January downloads and relates to a paper co-authored by Dr Mathijs Lucassen in WELS:

Fleming, Theresa M.; Bavin, Lynda; Stasiak, Karolina; Hermansson-Webb, Eve; Merry, Sally N.; Cheek, Colleen; Lucassen, Mathijs; Lau, Ho Ming; Pollmuller, Britta and Hetrick, Sarah (2017). Serious Games and Gamification for Mental Health: Current Status and Promising Directions. Frontiers in Psychiatry, 7, article no. 215.

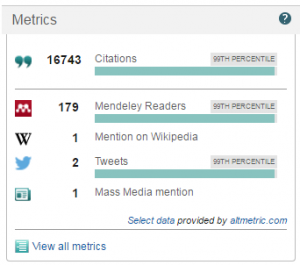

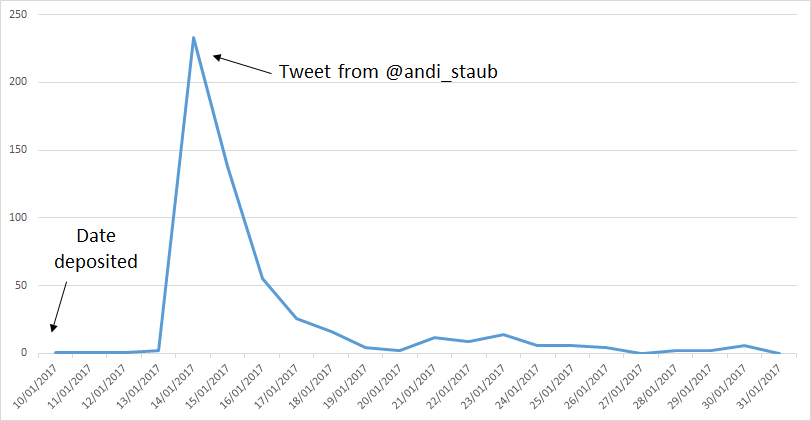

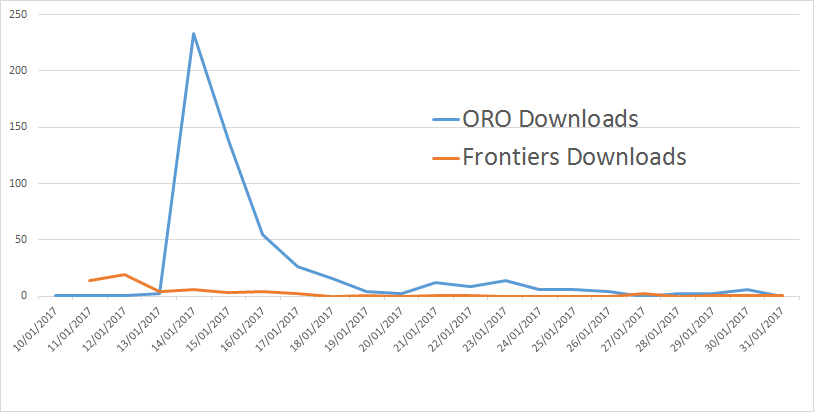

This one went through the roof, with 604 downloads in January, making it the second most downloaded item that month (full top 50 below). It was added to ORO on 10th January and almost immediately picked up on Twitter by @andi_staub.

The download pattern shows a remarkable correlation between that tweet and the number of ORO downloads of the article.

Initially I was sceptical that a single tweet could have that much impact, even though it did get plenty of likes and retweets. But Andreas Staub is apparently a Top 20 influencer in the world of FinTech. FinTech, Wikipedia told me, “is an industry composed of companies that use new technology and innovation with available resources in order to compete in the marketplace of traditional financial institutions and intermediaries in the delivery of financial services”, and it attracted $19.1 bn of funding in 2015.

So why might a FinTech influencer be interested in this research? Mathijs gave me the lowdown:

> People do seem very interested in serious gaming in mental health…I wonder if it is because people are aware of the addictive potential of commercial games, so they wonder how can a game be therapy? There are some really interesting ones out there (in addition to SPARX – I was a co-developer – Professor Sally Merry has led this work), like “Journey to the Wild Divine” a ‘freeze-framer’ game based on bio-feedback in a fantasy setting. The program is a mind and body training program, and uses biofeedback hardware (e.g. a user’s heart rate) along with highly specialised gaming software to assist in mindfulness and meditation training (e.g. a user has to learn to control their body in certain ways in order to progress through the game)…Plus programs like “Virtual Iraq” (to assist service men and women with Post Traumatic Stress Disorder with their recovery).

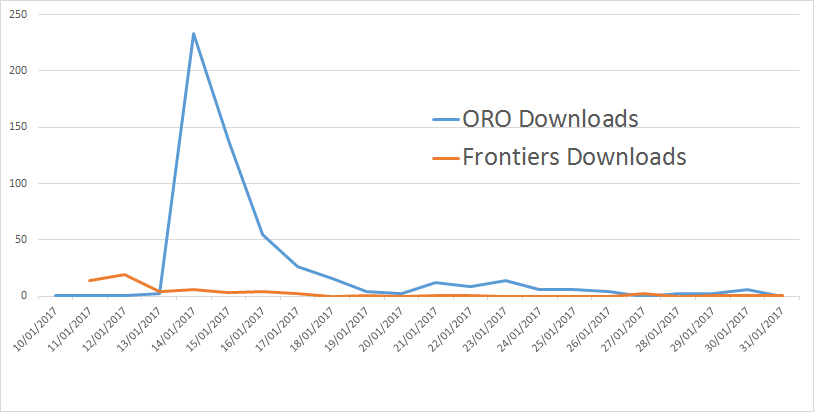

There was one other interesting thing about the downloads for this paper. It was published in an Open Access journal, so I’d have expected most downloads to come from the journal site. But the majority of downloads (at least in January, following this tweet) came from ORO.

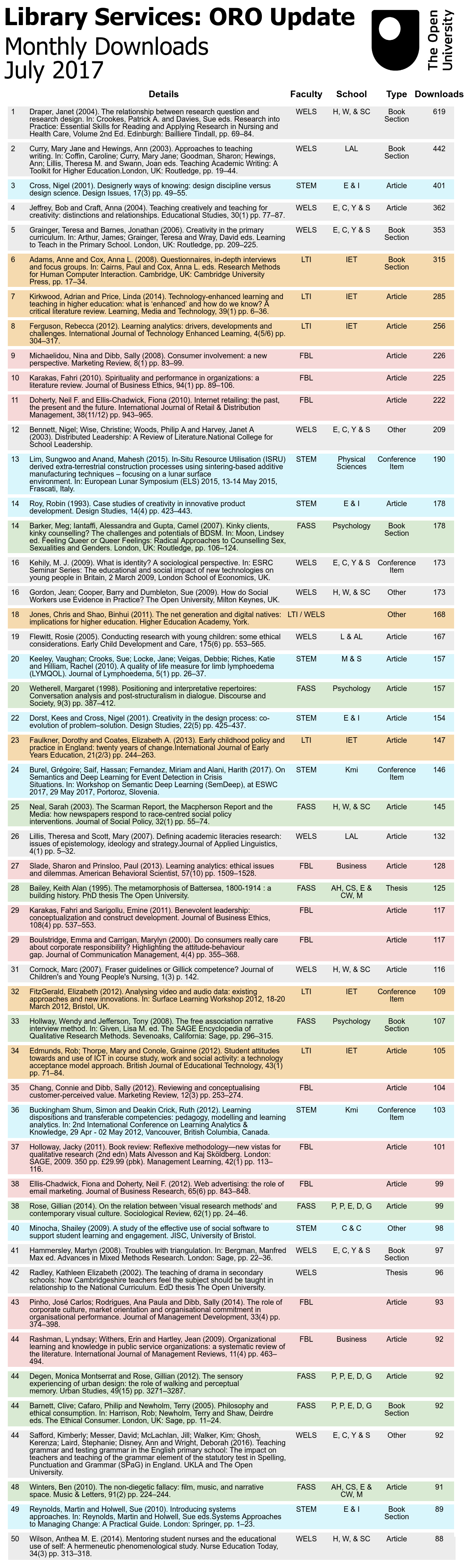

To me, this indicates that institutional repositories can be as effective as any other platform for disseminating your research, whether that platform is a publisher site or a commercial academic social networking site. The full Top 50 download lists are below: