For a researcher, SocialLearn is a vehicle and innovation platform for probing, and experimenting with, the future shape of the learning landscape.

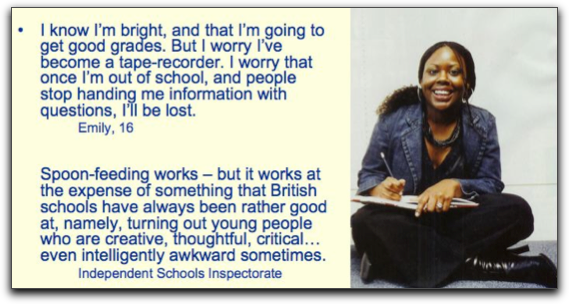

Learning analytics are, bluntly, ‘merely’ technology for assessment. And one of the pivotal debates raging at present is about our assessment regimes. It boils down to: what does good look like? “Attainment”, “Progress”, “High impact intervention”, “Quality Students”… all of these rest on foundational assumptions about what it is that we’re trying to accomplish. I am always struck by the slide Guy Claxton uses:

As discussed at length in many other reports and books, a false dichotomy can spring up in lazy (or politically charged) debates between, on the one hand, mastery of discipline knowledge and, on the other, transferable 21st century skills, as though the two must be in conflict, or as though the 21st century doesn’t also need subject matter knowledge. (See the Assessment and Teaching of 21st-Century Skills project for one perspective on this, and Whole Education for another.) What we know, of course, is that both are vital, and that while it’s possible to teach just enough for the learner to regurgitate knowledge to pass exams, what society needs is pedagogy that sparks the desire to learn for life, equips learners to conduct their own inquiries, empowers them to apply their knowledge in the real world, and prepares them to thrive under conditions of far greater uncertainty than those for which our current education systems were designed.

Learning analytics and automated assessment are key technology jigsaw pieces if the global thirst for learning is to be met. You simply can’t do it with paper, bricks+mortar, and human tutors marking everything. It doesn’t scale. Every VLE (or LMS for our non-English readers) now ships with a rudimentary analytics dashboard providing basic summary statistics on relatively low level user activities. The learning analytics horizon lies in defining and operationalizing computationally tractable patterns of user activity that may serve as proxies for higher order cognition and interaction.
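To make “basic summary statistics on relatively low level user activities” concrete, here is a minimal sketch of the kind of tally such a dashboard might compute. The event format (a list of `(user, action)` pairs), the action names, and the `summarize` function are all assumptions for illustration and are not taken from any particular VLE.

```python
from collections import Counter
from statistics import mean


def summarize(events):
    """Tally low-level activity from (user, action) pairs.

    The event format is an assumption made for this sketch.
    """
    per_action = Counter(action for _, action in events)
    per_user = Counter(user for user, _ in events)
    return {
        "events_per_action": dict(per_action),
        "events_per_user": dict(per_user),
        # Guard against an empty log, where a mean is undefined.
        "mean_events_per_user": mean(per_user.values()) if per_user else 0,
    }


if __name__ == "__main__":
    sample_log = [
        ("ana", "login"), ("ana", "view_page"), ("ana", "post_forum"),
        ("ben", "login"), ("ben", "view_page"),
    ]
    print(summarize(sample_log))
```

Counts like these say how much activity happened, not whether anyone learned anything, which is why the harder problem is finding patterns that can stand in for higher order cognition.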

The Social Learning Analytics programme focuses on what we hypothesize to be domain-independent patterns typical of the Web 2.0 sphere of activities, including social networking analytics, social discourse analytics, and dispositional analytics. We won’t say any more about those for now [learn more].

But here at the OpenU there’s a huge amount of learning technology innovation going on [overview]. While we care deeply about learning analytics for lifelong/lifewide learning, we’re also working on the complementary analytics which will enable very large numbers of learners to receive automated feedback on their mastery levels of a given topic, but through rich user interfaces that move us far beyond the old multiple-choice quiz.

In work led by Phil Butcher on OpenMark, for instance, you’ll find the pedagogical principles underpinning automated assessment for online learners who may be anywhere:

- **The emphasis we place on feedback.** All Open University students are distance learners and within the university we emphasise the importance of giving feedback on written assessments. The design of OpenMark assumes that feedback, perhaps at multiple levels, will be included.
- **Allowing multiple attempts.** OpenMark is an interactive system, and consequently we can ask students to act on feedback that we give ‘there and then’, while the problem is still in their mind. If their first answer is incorrect, they can have an immediate second, or third, attempt.
- **The breadth of interactions supported.** We aim to use the full capabilities of modern multimedia computers to create engaging assessments.
- **The design for anywhere, anytime use.** OpenMark assessments are designed to enable students to complete them in their own time in a manner that fits with normal life. They can be interrupted at any point and resumed later from the same location or from elsewhere on the internet.
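Three of these principles (feedback at multiple levels, multiple attempts, and interrupt-and-resume) can be sketched in a few lines. This is an illustrative sketch only, not OpenMark’s implementation: the `Question` and `AttemptState` classes, the `submit`, `save` and `resume` functions, the exact-match marking, and the feedback wording are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Question:
    prompt: str
    correct_answer: str
    hints: list[str]       # one hint per failed attempt, gentle to explicit
    max_attempts: int = 3


@dataclass
class AttemptState:
    attempts_used: int = 0
    solved: bool = False
    history: list[str] = field(default_factory=list)


def submit(question: Question, state: AttemptState, answer: str) -> str:
    """Record one attempt and return the feedback to show straight away."""
    if state.solved or state.attempts_used >= question.max_attempts:
        return "This question is closed."
    state.attempts_used += 1
    state.history.append(answer)
    if answer.strip().lower() == question.correct_answer.lower():
        state.solved = True
        return "Correct."
    if state.attempts_used >= question.max_attempts:
        return f"Incorrect. The expected answer was: {question.correct_answer}"
    # Use the hint for this attempt, reusing the last one if hints run out.
    hint = ""
    if question.hints:
        hint = question.hints[min(state.attempts_used - 1, len(question.hints) - 1)]
    return " ".join(part for part in ("Incorrect.", hint, "Try again.") if part)


def save(state: AttemptState) -> str:
    """Serialize progress so the learner can stop at any point."""
    return json.dumps(asdict(state))


def resume(saved: str) -> AttemptState:
    """Restore progress, possibly on another machine."""
    return AttemptState(**json.loads(saved))
```

Because the state is plain data, a learner could make one attempt, have `save(state)` stored on a server, and pick up later with `resume(...)` from elsewhere with the attempt count and history intact.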

The 3 screens below illustrate some of the interaction paradigms that OpenMark makes possible, but see their website for many more.

It’s also possible to provide formative assessment on short, free text answers to questions, and evaluation of human vs. automated assessment of learner responses is encouraging, e.g.

Philip G. Butcher & Sally E. Jordan (2010). A comparison of human and computer marking of short free-text student responses. Computers & Education, 55, pp. 489-499. DOI: http://dx.doi.org/10.1016/j.compedu.2010.02.012

Abstract: The computer marking of short-answer free-text responses of around a sentence in length has been found to be at least as good as that of six human markers. The marking accuracy of three separate computerised systems has been compared, one system (Intelligent Assessment Technologies FreeText Author) is based on computational linguistics whilst two (Regular Expressions and OpenMark) are based on the algorithmic manipulation of keywords. In all three cases, the development of high-quality response matching has been achieved by the use of real student responses to developmental versions of the questions and FreeText Author and OpenMark have been found to produce marking of broadly similar accuracy. Reasons for lack of accuracy in human marking and in each of the computer systems are discussed.
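To give a feel for what marking by “algorithmic manipulation of keywords” can look like, here is a minimal sketch of regular-expression response matching. It is not the algorithm used by OpenMark or by any system in the paper: the question, the `RULES` list, the `mark_response` function, and the feedback strings are all invented for illustration.

```python
import re

# Hypothetical rules for the question "Why does ice float on water?"
# Each rule is (pattern, is_correct, feedback); the first match wins.
RULES = [
    (re.compile(r"\b(less|lower)\s+dens", re.I), True,
     "Correct: ice is less dense than liquid water."),
    (re.compile(r"\b(more|higher|greater)\s+dens", re.I), False,
     "Check the direction: which of the two is denser?"),
    (re.compile(r"\bdens", re.I), False,
     "You mention density; say how the two densities compare."),
]

FALLBACK = (False, "Think about how tightly packed the molecules are.")


def mark_response(response: str) -> tuple[bool, str]:
    """Return (is_correct, feedback) for a short free-text answer."""
    for pattern, is_correct, feedback in RULES:
        if pattern.search(response):
            return is_correct, feedback
    return FALLBACK


if __name__ == "__main__":
    print(mark_response("Because ice is less dense than water"))
    print(mark_response("It is lighter"))
```

Rules this naive break easily: “water is more dense than ice” is a correct answer that the second rule marks wrong, and “ice is not less dense” would be marked right. That fragility is a plausible reason why, as the abstract notes, high-quality response matching was developed against real student responses.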

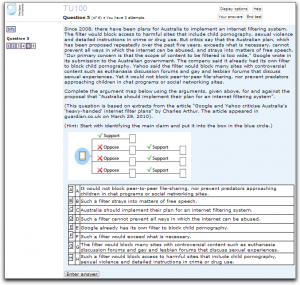

Work by Paul Piwek provides automated assessment of critical thinking skills. Embedded in the OU’s Moodle-based VLE (using OpenMark) is an integrated drag+drop interface in which students complete simple argument map templates as part of their analysis of a target text. The screen below shows how the learner drags the statements into the template to demonstrate their ability to parse the text into the top level claim and its supporting/challenging premises/sub-premises. Learners can then check their map: the check keeps the correctly placed elements where they are and leaves learners to try again with those they got wrong.
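The check step described above can be sketched as a comparison between the learner’s placements and an answer key. This is a simplified illustration and not the actual implementation: the slot names, the statement ids, and the `check_map` function are hypothetical, and the sketch assumes each template slot has exactly one correct statement (a real checker would probably need to accept interchangeable premises in either order).

```python
def check_map(answer_key: dict[str, str], placement: dict[str, str]):
    """Compare a learner's argument map against the answer key.

    Both arguments map a template slot (e.g. "claim") to a statement id.
    Returns (kept, retry): the placements that stay where they are, and
    the statement ids the learner should try placing again.
    """
    kept = {
        slot: stmt for slot, stmt in placement.items()
        if answer_key.get(slot) == stmt
    }
    retry = sorted(
        stmt for slot, stmt in placement.items()
        if answer_key.get(slot) != stmt
    )
    return kept, retry


if __name__ == "__main__":
    key = {"claim": "S1", "support": "S2", "challenge": "S3"}
    attempt = {"claim": "S1", "support": "S3", "challenge": "S2"}
    kept, retry = check_map(key, attempt)
    print("kept in place:", kept)
    print("try again with:", retry)
```

Returning the kept placements separately from the statements to retry mirrors the behaviour in the text: correct elements stay in the template and only the misplaced ones go back to the learner.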

So, the exciting thing is when analytics for learning-to-learn come together with analytics for discipline mastery. Without one or the other, we miss the defining contours of the new learning landscape: deep grasp of material, moving from logging low-level actions to higher-order cognition, tracking social learning dynamics, and dispositions for lifelong/lifewide learning.

My current mantra…

Our learning analytics are our pedagogy.

Let’s make them fit to equip learners for complexity.