Dr Mirjam Hauck ~ Academic Co-Lead for AI in LT&A; Eleanor Moore and Mary Simper ~ Learning Designers

The Critical AI Literacy skills framework

As Artificial Intelligence (AI) continues to make its impact felt in higher education (HE), influencing everything from the way we search online to how we learn and teach, it's important that educators adopt principles for embedding the responsible and ethical use of new and emerging technologies.

A collaborative project at The Open University (OU) has resulted in the creation of a framework for the Learning and Teaching of Critical AI Literacy skills. Led by Dr Mirjam Hauck, Academic Co-Lead for AI in Learning, Teaching and Assessment, the project brought together academics, Learning Design and the Library, with input from Careers and Employability and consultancy from Professor Mike Sharples (Emeritus, IET).

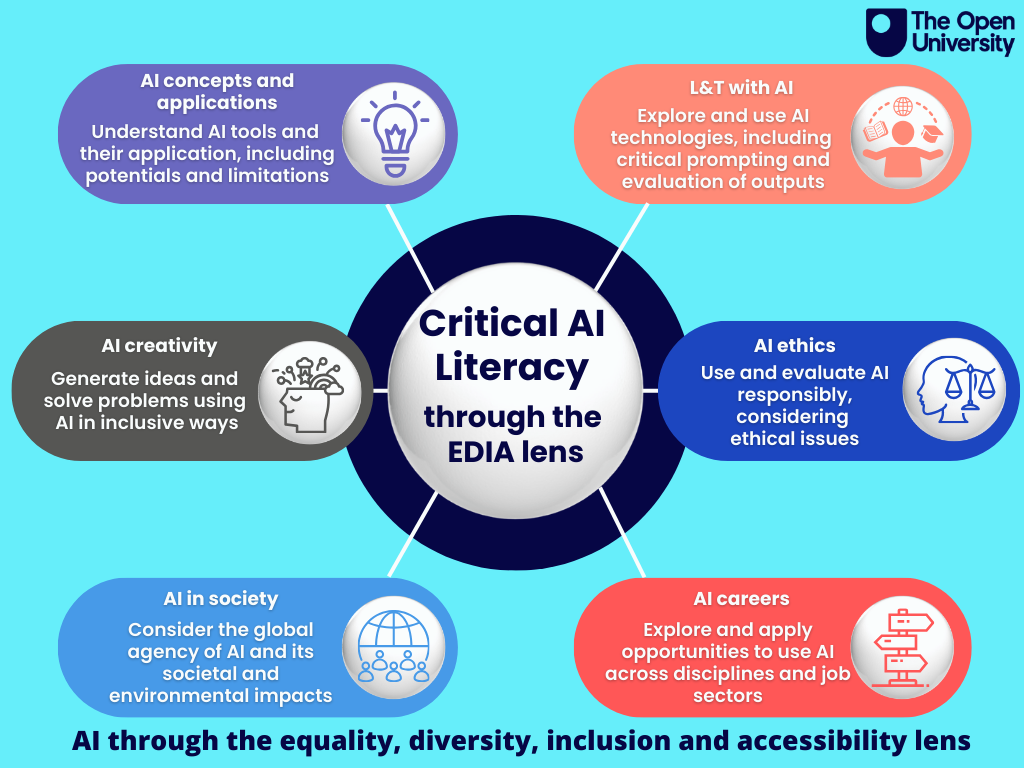

The framework is a practical tool designed to help academic staff and students make sense of AI and apply criticality in its use. It is firmly rooted in equality, diversity, inclusion and access (EDIA) principles and informed by research on Critical Digital Literacy, which highlights the influence of power in digital spaces. This research explores how knowledge, identities and relationships are shaped to privilege some while marginalising others.

Critical AI Literacy examines how Large Language Models (LLMs) – AI tools which generate human-like responses – contribute to ongoing epistemic injustices (Hauck, 2025).

Why is this framework necessary?

With generative AI (GenAI) now able to produce essays and increasingly embedded in everyday applications, students and educators need to critically evaluate how AI interacts with their academic and professional lives. This is especially important in an institution like the OU, where many learners come from widening participation backgrounds and access and equity are critical factors.

The framework revolves around six main sections:

- AI concepts and applications

- L&T with AI

- AI ethics

- AI creativity

- AI in society

- AI careers

Each section helps educators embed Critical AI Literacy in learning, teaching and assessment (LT&A) by focusing on the skills that students need to develop. Whether it's critiquing AI outputs, exploring ethical dilemmas or imagining creative uses, the framework supports learning agility, a must in our ever-changing, AI-driven world. By embracing this framework, staff are not just teaching about AI; they're equipping students with the skills to shape its future.

We're interested in exploring and capturing the experiences of individuals who are committed to engaging more actively with the framework. If you are willing to share your experience, please contact Mirjam: [email protected]

The Responsible by Design framework

As a complementary tool, the Responsible by Design framework aims to help staff identify opportunities to explore the ethical implications of AI and embed them in learning materials. When designing the framework, we considered institutional goals and aligned it with the OU's Live and Learn strategy, specifically its priorities of equity, societal impact and sustainability. We also took student voice into account: OU survey results show that students are concerned about the ethical implications of GenAI.

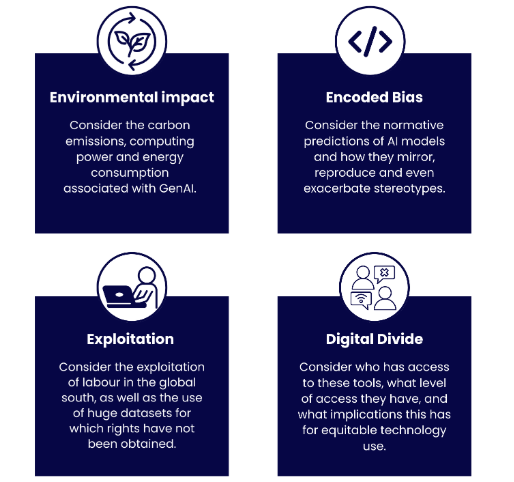

The framework is designed to be easy to use when either creating or reviewing content. It is built around four pillars: environmental impact, encoded bias, exploitation (of people and resources) and the digital divide.

Let’s explore these pillars in a little more detail:

Environmental impact

The high energy consumption of GenAI, compared with other digital tools such as traditional search engines, is often overlooked. Raising awareness of this issue is imperative.

Encoded bias

GenAI can not only replicate but also amplify biases and stereotypes that already exist in society. Staff and students need to be made aware of the normative predictions of AI models so that they can appropriately challenge and critique GenAI output.

Exploitation (of people and resources)

In terms of labour, GenAI models have been trained with the help of a process called 'data washing' (more commonly known as data labelling or annotation), in which humans sift through data and categorise it. This categorisation trains GenAI models, but the workers who do it are often underpaid and exposed to disturbing language and images without proper support.

In terms of data, GenAI models are often trained on huge datasets collected without permission from their creators. This has significant implications for intellectual property. It is essential that we teach students, and make staff aware of, the exploitation of labour and data so that they can make informed decisions about when to use GenAI.

Digital divide

GenAI is a new and rapidly developing tool that has the potential to deepen the digital divide.

This pillar asks questions such as:

- Who has access to the tool, and who can afford access to higher performing versions?

- Of those who have access, who knows how to use the tool effectively to help support them?

- Who is able to critique the tool? How many know and understand the ethical dimensions of tool use?

The Responsible by Design framework also offers a solution bank to support staff. Once they have reviewed content against all four pillars, staff can address the ethical implications of GenAI and produce more responsible learning materials.

Alongside the framework, the Learning Design team is developing resources to help academics discuss the pillars with students. These include infographics that explore the environmental impact of GenAI and contextualise AI usage by comparing it with everyday activities; one infographic, for example, equates generating a single AI image with fully charging a smartphone. So, although educators should encourage experimentation with AI, it's vital to raise awareness of its ethical implications and to ask, 'Should I be using AI for this task? Is there a better option, such as reusing materials or finding an image on a website?' Just as we would stop and think, 'Do I need to buy a new single-use plastic bag?', we should question our responsibility when using AI.

We hope that you enjoy exploring both the OU Critical AI Literacy skills framework and the Responsible by Design framework. We’d welcome your feedback and would love to know how you are approaching responsible and ethical use of AI in your institution. Please contact us at: [email protected].

The image used in this banner comes from https://betterimagesofai.org/, a website dedicated to researching, creating and curating Better Images of AI.

Banner image credit: Luke Conroy and Anne Fehres & AI4Media / Better Images of AI / Models Built From Fossils / CC-BY 4.0