Before I joined the OU, my background was in risk-based decision-making. I looked forward to finding innovative ways of gathering evidence of the impact of public engagement with research (PER). However, whenever PER was mentioned, evaluation seemed either to become the elephant in the room or to be quickly forgotten, and the conversation would focus on public engagement as opposed to public engagement with research.

In my experience, this doesn’t arise from ill intent but rather from a lack of understanding about the affordances of different PER activities and the methods and techniques used to evaluate the impact of PER.

This seminar was an opportunity to test a theoretical framework that I believe has the capacity to address this issue.

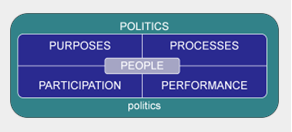

As the evaluation researcher on Engaging Opportunities and the Catalyst for Public Engagement with Research, my focus is on developing appropriate plans for evaluating the impact of PER. This is made easier by tools such as the 6 Ps, which Richard Holliman described in this post.

The six dimensions of engaged research help researchers to consider:

- Public: who they intend to engage with

- Purpose: why they want to engage

- Processes: how they intend to engage

- Participation: how the public(s) will interact with their research

- Performance: how performance will be measured

- Politics: what wider and local political issues need to be considered

However, planning and carrying out evaluation can still be daunting for people who aren’t familiar with qualitative and semi-quantitative methods of data collection and analysis. To address this, the theoretical framework offers a strategic approach for evaluating PER.

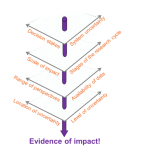

To introduce the group to the logic behind the framework, I asked them to discuss the value of the four kinds of activity we are using in Engaging Opportunities (open lectures, open dialogues, open enquiry, open creativity). I then asked them to position each activity on a schematic representing the levels of uncertainty that characterise the different stages of the research cycle (x axis) and the scale of impact (primary, secondary, tertiary) (y axis). This revealed the affordances that the group felt each type of activity had to offer. Fortunately, the group did see a distinction between the affordances offered by the different activities, which made for an easy transition into the theoretical framework, which is based on the concepts described by Funtowicz and Ravetz (1990).

In theory, once the group had articulated a set of simple PER objectives, they could use this framework to determine:

- the type of knowledge characterising each of their objectives

- the types of activity they should consider using

- the types of methods and techniques that would be suitable for evaluating the impact of their PER

To demonstrate, I gave an overview of the evaluation I had carried out for our 2013 (open creativity) media training programme, finishing with two questions:

- Do you see value in this approach?

- What’s the most pressing issue with the PER agenda?

Please use Comments to send me your thoughts.