You may be relieved to hear that this will be my final posting (at least for a while) on our use of different variants of interactive computer-marked assignment (iCMA) questions. We know that, whilst the different variants of many questions are of equivalent difficulty, we can’t claim this for all our questions. How much does this matter? A typical iCMA might include 10 questions and contribute something between 2 and 5% to a student’s overall score. My Course Team colleagues and I have reassured ourselves that the effect of the different difficulty of some variants of some questions will, when averaged out over a large number of questions, have minimal impact on a student’s overall score. But is this true?

At the International Computer Assisted Assessment Conference (CAA 2010), held in Southampton in July, John Dermo presented a very interesting paper on a similar subject. John had asked a similar question for the situation where e-assessment test items are selected from a pool of questions assumed to be of similar difficulty. He used a relatively sophisticated analysis (Rasch analysis) and concluded that, although the selection of questions from a pool had little effect on overall scores, a small number of borderline students had been advantaged or disadvantaged by it, and that it was therefore worth trying to compensate for the effect.

After reading up about Rasch modelling and trying to get it to fit our data, we concluded that this approach would not be valid for OpenMark iCMA questions. This is for two reasons:

- The Rasch model assumes that partial credit implies a partially correct solution. In OpenMark, partial credit is most usually awarded for a completely correct solution at a second or third attempt.

- The Rasch model assumes unidimensionality, i.e. that all the questions are assessing the same thing.

So, for now, we have used a much simpler approach. We know that the mean score of a variant is not a particularly good measure of how difficult a particular variant is, but it does have the merit of being easy to work with. So…

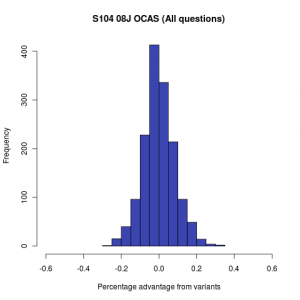

We calculated the mean score for each variant of each question, and compared it with the mean score for the entire question. If, for example, a variant had a mean score of 2.1, but the mean score for the whole question was 2.4, then we concluded that students who had answered this variant had been ‘disadvantaged by 2.4 – 2.1 = 0.3 marks’. We repeated this process for all the questions answered by each individual student and weighted the ‘advantage’ or ‘disadvantage’ in the same way that iCMA marks are weighted within the course – and so we arrived at a number representing the overall effect on that student’s score. We repeated this process for each student on the course and plotted a histogram of the amount by which they were advantaged or disadvantaged.
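For anyone wanting to try the same calculation, here is a minimal sketch of the steps just described. It is not the code we actually used: the function names, the `(student, question, variant, score)` record layout and the `weights` mapping are all assumptions made for illustration, and the data is made up.

```python
from collections import defaultdict


def variant_offsets(responses):
    """Return {(question, variant): variant mean - question mean}.

    `responses` is an iterable of (student, question, variant, score) tuples.
    A negative offset means the variant scored below the question average,
    i.e. students who received it were 'disadvantaged'.
    """
    question_scores = defaultdict(list)
    variant_scores = defaultdict(list)
    for _student, question, variant, score in responses:
        question_scores[question].append(score)
        variant_scores[(question, variant)].append(score)

    offsets = {}
    for (question, variant), scores in variant_scores.items():
        variant_mean = sum(scores) / len(scores)
        all_scores = question_scores[question]
        question_mean = sum(all_scores) / len(all_scores)
        offsets[(question, variant)] = variant_mean - question_mean
    return offsets


def student_effects(responses, weights):
    """Sum each student's weighted offsets over the questions they answered.

    `weights` maps question -> contribution to the overall course score
    per raw mark (a hypothetical stand-in for the real course weighting).
    """
    responses = list(responses)  # allow two passes over a generator
    offsets = variant_offsets(responses)
    effects = defaultdict(float)
    for student, question, variant, _score in responses:
        effects[student] += weights[question] * offsets[(question, variant)]
    return dict(effects)


# Made-up data: one question, two variants, four students.
responses = [
    ("s1", "Q1", "a", 3),
    ("s2", "Q1", "a", 3),
    ("s3", "Q1", "b", 1),
    ("s4", "Q1", "b", 3),
]
weights = {"Q1": 0.1}  # hypothetical: 0.1 percentage points per raw mark
effects = student_effects(responses, weights)
```

The values in `effects` (one per student) are the numbers that would then be binned into the histogram.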

The result for the continuous assessment component of S104 Exploring science is shown in the figure above. The effect of the different variants of iCMA questions accounts for a difference in grading of less than ±0.4%. Compared with the known variation in human grading, this is pretty negligible! However, in line with John Dermo’s finding, it means that we should continue to look at individual borderline cases carefully.

We could adjust students’ overall results to compensate for this effect, but there are various theoretical and practical objections to this. In particular, consider a student who was given an easy variant and gets full marks. Is it reasonable to reduce their mark because the variant was easy? If they are an able student, they would have got full marks even on the most difficult variant, so should we penalise them for being given an easy variant? Similarly, it doesn’t seem fair to increase the mark of a low ability student who was given a difficult variant and got it wrong – we have no proof that they would have done any better with the easy variant.

In general terms, the more iCMA questions that are used, and the lower their weighting, the smaller the effect will be. It is important that we remember these things, and use sufficient questions. In addition, again in line with John’s conclusions, it is important that question authors are alerted to situations where different variants have different difficulty, so that they can investigate the reasons for this, and improve their questions wherever possible.
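A rough way to see why, under simplifying assumptions that go beyond the analysis described here: suppose the iCMA questions together carry a total weighting $W$, split equally over $n$ questions, and that the variant a student receives on question $i$ shifts their mark by an offset $d_i$, with the offsets independent, of mean zero and of common standard deviation $\sigma$. Then the overall effect on the student's score is

$$
E = \sum_{i=1}^{n} \frac{W}{n}\, d_i ,
\qquad
\operatorname{Var}(E) = \frac{W^2}{n^2} \cdot n\sigma^2 = \frac{W^2 \sigma^2}{n},
\qquad
\operatorname{SD}(E) = \frac{W\sigma}{\sqrt{n}} .
$$

So, in this idealised picture, the spread of the effect shrinks in proportion to the weighting $W$ and as $1/\sqrt{n}$ in the number of questions. Real offsets need not be independent or equally spread, so this is only indicative.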

Hi Sally,

Only now found this older post. We use variants of questions in our numerical question types too. As Questionmark Perception cannot create dynamic variants on the spot (as, for instance, Maple TA does), we create all variant questions in Excel and then import them into QMP. Using Excel brings the advantage of being able to fine-tune which variables are used and how. So no ‘ugly’ questions.

It would be interesting for us to use the same approach to try to measure the difficulty of each variant.