Hot on the heels of my previous post, I’d like to make it clear that human markers sometimes do better than computers in marking short-answer [fewer than 20 words] free-text questions.

I have found this to be the case in two situations in particular:

- where a response includes a correct answer but also an incorrect answer;

- where a human marker can ‘read into’ the words used to see that a response shows good understanding, even if it doesn’t use the actual words that you were looking for.

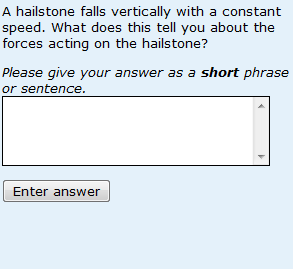

In the case of the first situation, I’d like to explain a bit more about what I mean. I’m not saying that students deliberately include both a correct and an incorrect response. For example, in response to the question shown on the left, students don’t write ‘The forces are balanced. The forces are unbalanced.’ in order to fool the system.

The only time you see a response like this is when a student has given the wrong answer, received feedback telling them that it is wrong, and then added the (opposite) correct answer, forgetting to remove the incorrect one. Whether that should be marked right or wrong is open for discussion, as is whether we should be offering multiple attempts at questions with ‘opposite’ right and wrong answers. Here my point is simply that students do not attempt to cheat the system by deliberately giving two opposite responses at the same time.

What happens more frequently is that students give the correct response but then, in attempting to explain their answer, they go on to add text which illustrates a lack of understanding. For example ‘The forces are balanced [so far so good] with the force of gravity being greater than the force of air resistance [oops].’
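
To illustrate why a computer can be caught out by responses like these, here is a minimal sketch in Python of a deliberately naive marking rule. It is not the system used for these questions; the function name `naive_mark` and the rule itself (accept any response containing the word ‘balanced’) are assumptions made purely for illustration.

```python
import re


def naive_mark(response: str) -> bool:
    """Hypothetical rule: mark as correct if the word 'balanced' appears.

    This is an illustrative assumption, not a real marking scheme.
    """
    # Split into lowercase words so 'unbalanced' is not mistaken for 'balanced'.
    words = re.findall(r"[a-z]+", response.lower())
    return "balanced" in words


good = "The forces are balanced."
flawed = (
    "The forces are balanced with the force of gravity "
    "being greater than the force of air resistance."
)
both = "The forces are balanced. The forces are unbalanced."

for response in (good, flawed, both):
    print(naive_mark(response), "-", response)
```

A rule this simple accepts all three responses, because each contains the expected word. A human marker would spot that the second goes on to contradict itself and that the third gives both answers at once.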