I’ve been looking at student responses to our short-answer free-text questions, and I’ll start by considering something simple: how long are the responses? It turns out that the length of student responses varies considerably from question to question. In this posting I will consider responses to the question shown below:

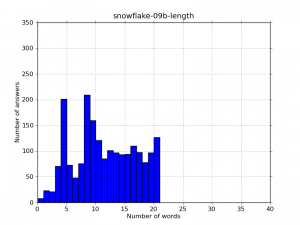

Initially we only used the questions formatively and we didn’t tell students how long we expected their responses to be. The length distribution of 888 responses to the snowflake question is given below:

The distribution is bimodal, with peaks at one word (corresponding to responses such as ‘balanced’) and at three words (corresponding to responses such as ‘They are balanced’ and ‘equal and opposite’). There are a small number of very long responses.

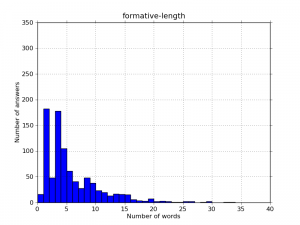
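For anyone wanting to reproduce this kind of analysis, here is a minimal Python sketch of how a length distribution (and the median length) might be computed from a list of responses. It assumes that a ‘word’ is simply a whitespace-separated token; the post doesn’t say exactly how words were counted, and the function names are hypothetical, chosen for illustration.

```python
from collections import Counter
from statistics import median


def word_count(response: str) -> int:
    """Count words as whitespace-separated tokens (an assumed definition of 'word')."""
    return len(response.split())


def length_distribution(responses: list[str]) -> Counter:
    """Map each response length (in words) to the number of responses with that length."""
    return Counter(word_count(r) for r in responses)


def median_length(responses: list[str]) -> float:
    """Median response length in words."""
    return median(word_count(r) for r in responses)


if __name__ == "__main__":
    # Example responses quoted in this post, not real data from the question.
    sample = [
        "balanced",
        "They are balanced",
        "equal and opposite",
        "The forces are balanced",
        "The forces of gravity and air resistance are equal",
    ]
    dist = length_distribution(sample)
    for length in sorted(dist):
        print(f"{length:3d} words: {dist[length]}")
    print("median:", median_length(sample))
```

Plotting the counts from `length_distribution` as a bar chart gives histograms like the ones in this post; a bimodal shape shows up as two separate local maxima.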

The first time this question was used for low-stakes summative assessment, we again didn’t tell students how long we expected their responses to be, although the general instructions implied that they should answer in a single sentence. The length distribution (below) of 2057 responses is again bimodal, but the peaks are now at 3-4 words (e.g. ‘The forces are balanced’) and 8-9 words (e.g. ‘The forces of gravity and air resistance are equal’).

There are more excessively long responses, such as the following one (64 words):

> Gravity from the earth core is pulling on the snow flake and no other force is acting on it for example wind. When the snow flake reach the ground there are two forces acting on it. One is the gravitational pull from the earth and the upward force from the ground. Both these force are balance which means that the snow flake is statioary

The answer matching generally coped well with responses of all lengths, but very long responses are more likely to contain a correct response within an incorrect one, which Tom Mitchell identified as the biggest problem area for the automated marking of short-answer questions. For this reason, we decided to impose a filter so that only responses of 20 words or fewer would be accepted for marking (if students gave an answer that was too long, they were told so and given a ‘free go’ in which to shorten it). The warning ‘You should give your answer as a short phrase or sentence. Answers of more than 20 words will not be accepted.’ was added to all questions.
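The filter itself is simple to express. The sketch below is a hypothetical illustration, not the implementation actually used: `submit`, `Attempt` and the feedback wording are invented for the example, and it again assumes that words are whitespace-separated tokens. An over-long response is not marked and does not use up an attempt (the ‘free go’); anything of 20 words or fewer is passed on to the answer matching.

```python
from dataclasses import dataclass

MAX_WORDS = 20  # the limit described in the post

# Hypothetical feedback text; the post does not give the exact wording students saw.
TOO_LONG_FEEDBACK = "Your answer is more than 20 words long. Please shorten it and try again."


@dataclass
class Attempt:
    accepted: bool           # True if the response is passed on for marking
    counts_as_attempt: bool  # False for the 'free go' after an over-long answer
    feedback: str = ""


def submit(response: str, max_words: int = MAX_WORDS) -> Attempt:
    """Hypothetical length gate applied before answer matching."""
    if len(response.split()) > max_words:
        # Too long: tell the student, and don't use up one of their attempts.
        return Attempt(accepted=False, counts_as_attempt=False, feedback=TOO_LONG_FEEDBACK)
    # Short enough: hand the response on to the answer-matching step.
    return Attempt(accepted=True, counts_as_attempt=True)
```

Note that a response of exactly 20 words is accepted, since only answers of *more than* 20 words are rejected.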

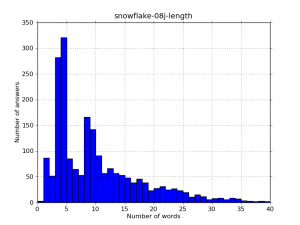

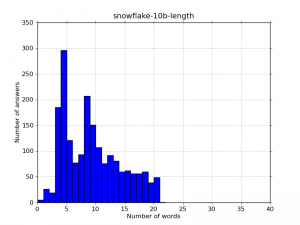

So what was the effect? You may have guessed. The length distribution of 1991 responses is shown below:

There are still peaks at 4 words and 8-9 words, and the filter has obviously been effective in removing the excessively long answers. But the general trend is towards longer answers (the median has increased from 8 words to 10 words); some students believed that we were telling them that we expected the answer to be 20 words long, and so they gave responses that were unnecessarily long.

So we have removed the specific instruction about length. We now just tell students: ‘You should give your answer as a short phrase or sentence’, and they are only told that their response needs to be no more than 20 words if their original response is longer than this. The latest length distribution (for 1919 responses) is shown below. The bulk of the responses of 20 words or in the high teens has gone, and the median has returned to 8 words. Phew!

There is an important general point here – students do what they believe ‘the system’ wants them to do in order to get the marks; sometimes they are receiving an unintentional and incorrect ‘hidden message’.
