I am surprised that I haven’t posted the figure below previously, but I don’t think I have.

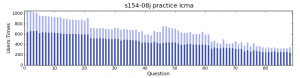

It shows, question by question, the number of distinct users (dark blue) and the number of usages (light blue). So the light blue bars also count students repeating whole questions.
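To make the difference between the two bars concrete, here is a minimal sketch of how the counts could be computed. It assumes an attempt log of `(student_id, question)` pairs; that log format, the function name `users_and_usages` and the sample data are all made up for illustration and are not the real iCMA data.

```python
from collections import defaultdict


def users_and_usages(attempts):
    """Return {question: (distinct_users, usages)} from (student_id, question) pairs."""
    students = defaultdict(set)   # question -> set of students who tried it
    usages = defaultdict(int)     # question -> total attempts, repeats included
    for student_id, question in attempts:
        students[question].add(student_id)
        usages[question] += 1
    return {q: (len(students[q]), usages[q]) for q in sorted(usages)}


# Hypothetical log: student "a" repeats question 1.
attempts = [("a", 1), ("a", 1), ("b", 1), ("a", 2)]
counts = users_and_usages(attempts)
print(counts)  # {1: (2, 3), 2: (1, 1)}
```

Question 1 has two users but three usages, which is the gap between the dark and light blue bars.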

This is for a purely formative interactive computer-marked assignment (iCMA), and usage drops off in exactly the same way for any purely formative quiz. Before you tell me that this iCMA is too long: I think I’d agree, but note that you get exactly the same attrition (both within and between iCMAs) if you split the questions up into separate iCMAs. In fact, the signposting in this iCMA was so good that usage sometimes INCREASES from one question to the next. This happens for sections (in this case chemistry!) that the students find hard.

The point of this post, though, is to highlight the danger of saying that a student clicked on one question and therefore engaged with the iCMA. Just how many questions did that student attempt? How deeply did they engage? The situation becomes even more complicated when you consider that around another 100 students clicked on this iCMA but didn’t complete any questions (and again, this is common for all purely formative quizzes). So be careful: clicks alone (on a resource of any sort) do not tell you much about engagement.
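One way to look past the click is to count how many distinct questions each student actually completed. The sketch below assumes you have a set of students who opened the quiz and a list of `(student_id, question)` completions. The function name `engagement_summary` and the data are hypothetical, and this is only one possible measure of depth.

```python
def engagement_summary(opened, completed):
    """Summarise depth of engagement per student.

    opened:    set of student ids who clicked on the quiz
    completed: iterable of (student_id, question) for completed questions
    """
    per_student = {s: set() for s in opened}
    for student_id, question in completed:
        # Repeats of the same question do not add to depth.
        per_student.setdefault(student_id, set()).add(question)
    depth = {s: len(qs) for s, qs in per_student.items()}
    return {
        "clicked": len(depth),
        "completed_none": sum(1 for n in depth.values() if n == 0),
        "depth": depth,
    }


# Hypothetical data: "c" opened the quiz but completed nothing.
opened = {"a", "b", "c"}
completed = [("a", 1), ("a", 2), ("a", 1), ("b", 1)]
summary = engagement_summary(opened, completed)
print(summary["clicked"], summary["completed_none"])  # 3 1
```

All three students would count as "engaged" on clicks alone, yet one completed nothing and only one got beyond the first question.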