In my last post, just over a month ago (sorry folks, I’ve been a bit busy), I was ambivalent about the news that GCSEs in England are to be replaced by an ‘English Baccalaureate’, and about the more general trend towards increasing use of examinations and decreasing use of other methods of summative assessment.

My views have now firmed up a bit – I don’t like it! Yes, I had a very conventional education with lots of exams (O-levels, A-levels and a degree in the 1970s) and I thrived on it. But many didn’t. And does the fact that I was good at exams mean that I was good at everything those exams were supposedly assessing? I rather doubt it.

This brings me to the issue of (lack of) authenticity. There are many skills that are very important in real life that are rather difficult to assess by exam. Exams are often defended as a safeguard against plagiarism, but of course there are other ways (though perhaps not quite so watertight) to reduce it: ask questions that expect students to use the internet, referencing their sources; ask questions that expect students to work together, reflecting on the process.

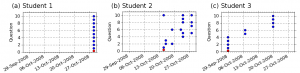

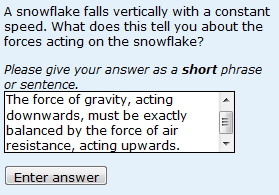

I’d like to concentrate on the difficulty of assessing investigative science by exam. At a meeting yesterday, Brian Whalley highlighted the difficulty in assessing field work in this way. It is possible to assess some skills in practical science by exam – back in the 1970s I did physics practical exams alongside written A-level papers – but these generally just expected you to demonstrate a particular technique that you had practised and practised and practised. There are far more appropriate methods for assessing the sort of investigative science that real scientists do. Please, UK government, think carefully before imposing exams in places where they do not belong.
