The first 2021 seminar of the Special Interest Group focused on Artificial Intelligence in Education (openAIED SIG) was led by Josmario Albuquerque, a second-year PhD student at the OU Institute of Educational Technology, on Wednesday 7th July.

With a background in Computer Science, Josmario has been involved in IT projects related to Artificial Intelligence in Education, Learning Analytics, and the use of Computer Science to address social issues. His current research builds on these past projects: he is studying group biases in online learning settings. During the seminar, Josmario suggested that human biases and stereotypes are still present in educational settings, where they undermine several aspects of learning.

It is necessary to detect those issues related to social justice that can jeopardise students’ academic performance, confidence, mental health and engagement when learning online. Hence, he is investigating technologies that can help uncover potential group biases within online education.

He referred to group bias as any inclination or favouritism towards people belonging to particular groups (Greenwald & Krieger, 2006). In online learning, external and internal bias can occur when designing a course or giving feedback to students. Such favouritism, however, must first be identified before it can be addressed appropriately.

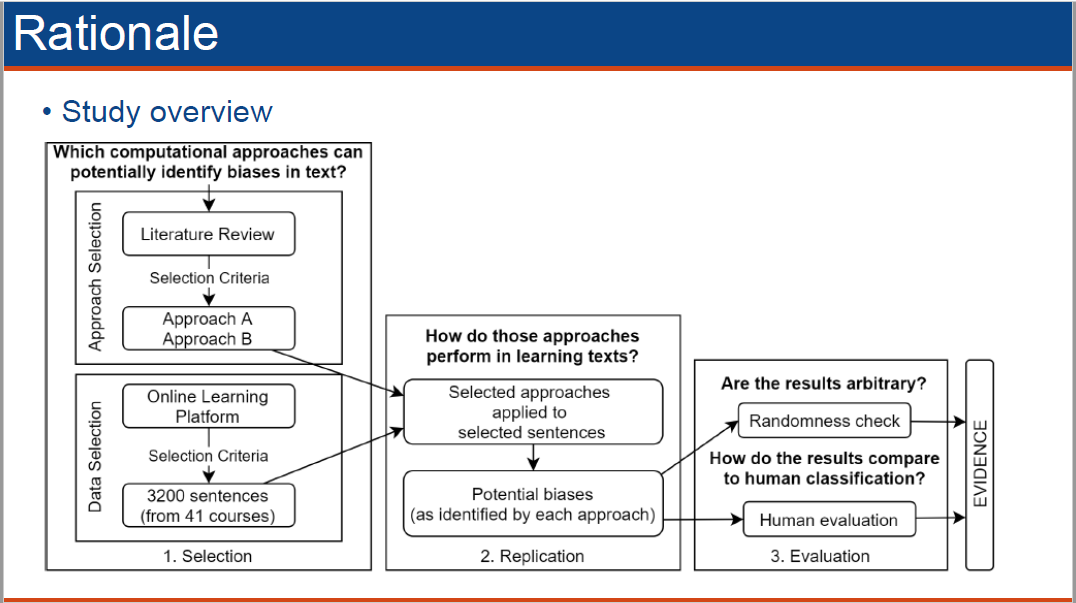

Numerous approaches have been used to detect group bias in other fields, such as self-reports, psychological experiments and discourse analysis techniques. In Josmario’s study, computational bias-detection approaches were selected, replicated and tested to uncover potential group biases in learning materials presented on an online learning platform.

After conducting a thorough literature review that included 84 studies, he identified two computational approaches that could potentially detect biases in text. One approach focused on gender bias and the other on subjective language. These two bias-detection algorithms are generally used on job advertisements and Wikipedia, respectively.

Next, the two bias-detection algorithms classified 2,024 sentences from 41 online courses across eight disciplines. Although both approaches flagged potential biases within the modules analysed, the extent to which those biases are relevant for an educational setting is questionable.
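To make sentence-level classification concrete, here is a minimal sketch of how a lexicon-based gender-coding check might label sentences. It is not the algorithm used in Josmario's study: the stem lists, the function names `classify_sentence` and `summarise`, and the three labels are all assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stem lists for illustration only;
# a real lexicon would be far larger and empirically validated.
MASCULINE_STEMS = ("compet", "domina", "leader", "assert")
FEMININE_STEMS = ("support", "collab", "nurtur", "empath")


def classify_sentence(sentence):
    """Label a sentence by counting words that start with a listed stem."""
    words = re.findall(r"[a-z]+", sentence.lower())
    masculine = sum(w.startswith(MASCULINE_STEMS) for w in words)
    feminine = sum(w.startswith(FEMININE_STEMS) for w in words)
    if masculine > feminine:
        return "masculine-coded"
    if feminine > masculine:
        return "feminine-coded"
    return "neutral"  # no matches, or an equal number on each side


def summarise(sentences):
    """Count how many sentences receive each label."""
    return Counter(classify_sentence(s) for s in sentences)


if __name__ == "__main__":
    sample = [
        "Students compete to dominate the leaderboard.",
        "Work collaboratively and support your peers.",
        "The module starts in October.",
    ]
    for sentence in sample:
        print(classify_sentence(sentence), "|", sentence)
```

Because the check only matches word stems, it flags "leaderboard" as readily as "leader". This illustrates why flags produced without context can be hard to judge in educational material.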

One of the reasons for questioning the outcomes obtained from the two approaches was the lack of context that made it hard to judge the relevance of each instance. Josmario said:

“Context matters. Regarding the gender approach, for example, I don’t think its inferences are accurate as it was designed for job advertisements. Anyway, I think that without context, it will be hard to tell which approach is doing well or not.”

He also pointed out that research on the automatic identification of group bias in educational texts is scarce, and that none of the approaches reviewed in the literature focused on racial discrimination. He concluded his presentation by stating:

“The use of technology for identifying potential biases in online learning seems promising towards fair educational settings. Future works may benefit from accounting for context-sensitive biases and focus on underrepresented populations, particularly individuals from black and minority ethnic groups.”

Josmario’s presentation led to a thought-provoking discussion afterwards. Attendees also reflected on their practice at work. One of them said: “There is a lot of power in the words and the connotations of those words we use when communicating a message in our daily basis”. Therefore, it is essential to acknowledge and address those potential biases to improve not only the development of learning materials but also the performance and well-being of students learning online.

If you would like to keep up to date with the openAIED SIG agenda, don’t hesitate to get in touch with us to add your name to the openAIED mailing list.

Greenwald, A. G., & Krieger, L. H. (2006). Implicit bias: Scientific foundations. California Law Review, 94(4), 945-967.