Ethically deploying AI in education: An update from the University of Warwick’s open community of practice

By Isabel Fischer, Leda Mirbahai, Lewis Beer, David Buxton, Sam Grierson, Lee Griffin, and Neha Gupta

We have deployed AI in education for several years, for example by developing a student-facing, AI-based feedback tool for assignments, and generative AI has propelled our agenda forward. The purpose of our community of practice is to help students and educators who want to use AI to do so in an ethical and meaningful way.

In partnership with other universities and stakeholders, we created a community of practice focused on the use of AI in education. Our aim is to support universities in pivoting towards a culture that embraces the ethical use of AI and facilitates its integration, while being mindful of both the opportunities and risks it brings. Our community of practice builds on several overarching principles:

- Educators’ choice: Lecture content, applied methods, assessments, and ways of marking need not be the same across all modules, so educators require the freedom to decide whether or not to adopt AI for any aspect of their work.

- Supporting educators in their choice(s): Educators should feel supported in their decision about whether or not to adopt AI, and those who adopt it should receive adequate guidance. See, for example, advice on integrating AI into assessments: https://warwick.ac.uk/fac/cross_fac/academic-development/assessmentdesign/assessmentdesignprinciples/

- Ensuring fairness: Whether or not educators adopt AI, they need to ensure that their decision is fair for all students, regardless of background, demographics, or protected characteristics such as a visible or invisible disability. Fairness includes supporting all students as well as discouraging and preventing behaviour that would give an unfair advantage or otherwise conflict with the principles of academic integrity.

- Encouraging students to think critically: Because of its speed and eloquence, generative AI is sometimes seen by students as a ‘replacement’ for their own thinking. Instead, AI tools, including ChatGPT, should be deployed to encourage critical thinking, for example by exploring different perspectives, challenging assumptions, and evaluating evidence.

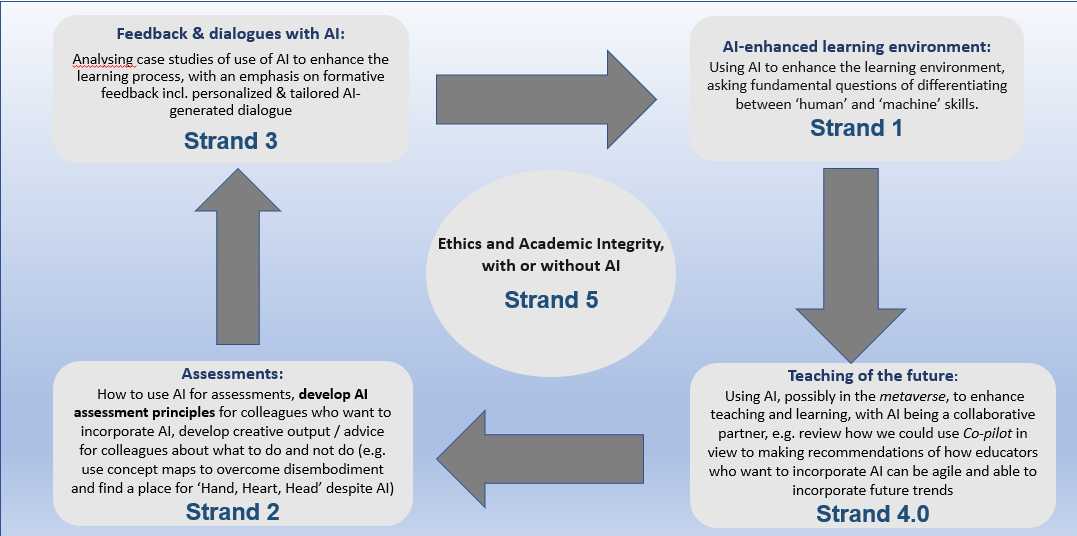

To work towards these principles, we created five strands, or sub-groups, each with a different focus. Figure 1 outlines the strands and the relationships between them: the more generic and fundamental ‘big questions’ (strand 1) feed into teaching (strand 4), assessment (strand 2), and feedback (strand 3). All work is underpinned by ethics and academic integrity (strand 5).

If you are interested in joining this community of practice, please contact Isabel (Isabel.fischer@wbs.ac.uk), indicating your strand(s) of interest.

SCiLAB is grateful for this guest blog post, which was submitted following the joint SCiLAB/Warwick seminar on 23 March 2023, ‘Starting out in the Scholarship of Teaching and Learning: Stories from the Chalk Face - Insights and Innovations’.

References

- Image generated with DALL·E 2 by OpenAI, retrieved 15/3/23 using the prompt ‘Interdisciplinarity Creating Digital Futures’.