First of all, for the benefit of those who are not native speakers of English, I ought to explain the meaning of the phrase ‘It’s just not cricket’. The game of cricket carries connotations of being something that is played by ‘gentlemen’ (probably on a village green) who wouldn’t dream of cheating – so if something is unfair, biased or involves cheating it is ‘just not cricket’. This post is about bias and unfairness in assessment.

© Copyright Martin Addison and licensed for reuse under this Creative Commons Licence

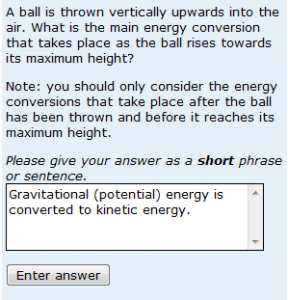

It is right that we do all we reasonably can to remove unfair bias (e.g. by not using idiomatic language like ‘it’s just not cricket’…) but how far down this road it is appropriate to go is a matter of some debate. Some of my colleagues love to make their tutor-marked assignment questions interesting and, by coincidence, some of them are rather fond of cricket… so we end up with all sorts of energy considerations relating to cricket balls and wickets. Is that fair? Are students who don’t know about cricket disadvantaged? Or perhaps it is the students who know lots about cricket who are disadvantaged – they may try to interpret the question too deeply. In situations like this, I insist on including an explanation of the terms used, but I sometimes wonder if we’d be better off not trying to make assessment ‘interesting’ in this way. The sport gets in the way of the physics.