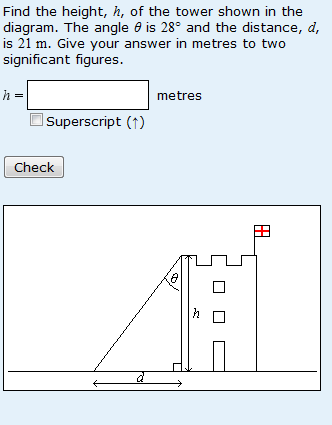

It is not a mistake that I start this post with a screenshot of the same variant of the same question that I was talking about last time.

I said last time that 8.2% of responses got the trigonometry or the algebra wrong. The problem is that 6.2% of responses are wrong simply because the students can't round correctly.

To five significant figures, the correct answer is 39.495 metres. As I've said before, people seem to be very poor at rounding this sort of number. The correct answer, to the requested two significant figures, is 39 metres, but those 6.2% of responses give an answer of 40 metres.
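One way a 40 can arise, which I am offering as an illustration rather than something the responses themselves tell us, is rounding in stages: 39.495 becomes 39.50, then 39.5, then 40. Rounding once, directly to two significant figures, gives 39. The sketch below contrasts the two. The function names `round_sig` and `round_in_stages` are hypothetical, and it uses Python's `decimal` module with round-half-up so that binary floating-point representation of 39.495 doesn't muddy the comparison.

```python
from decimal import Decimal, ROUND_HALF_UP

def round_sig(x, sig: int) -> Decimal:
    """Round x to `sig` significant figures, rounding halves up."""
    d = Decimal(x)
    # adjusted() is the exponent of the leading digit (1 for 39.495),
    # so this is the exponent of the last digit we want to keep.
    exponent = d.adjusted() - sig + 1
    return d.quantize(Decimal(1).scaleb(exponent), rounding=ROUND_HALF_UP)

def round_in_stages(x, start: int, target: int) -> Decimal:
    """Hypothetical student strategy: round one significant figure at a time."""
    d = Decimal(x)
    for sig in range(start, target - 1, -1):
        d = round_sig(d, sig)
    return d

answer = Decimal("39.495")
print(round_sig(answer, 2))           # 39  (correct: round once)
print(round_in_stages(answer, 4, 2))  # 40  (39.50 -> 39.5 -> 40)
```

The only difference between the two paths is that the staged version lets each intermediate rounding feed the next, so the digit that decides the final rounding is no longer the original one.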

There are two lessons here:

1. Improve the teaching on rounding (already done);

2. Don't assume you know what students will do wrong in e-assessment questions. Look at real responses from real students. I don't apologise for saying that yet again. If you don't do this, you will be left, as I have been here, with a question that, for a sizeable fraction of students, is not assessing what the author (me!) intended.