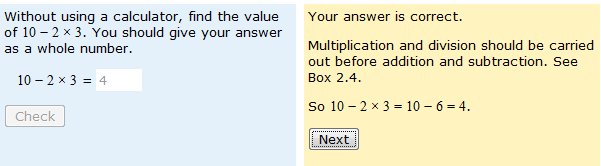

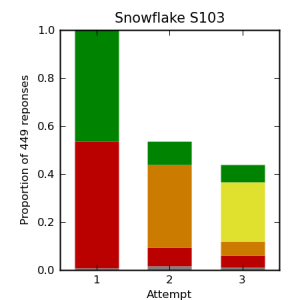

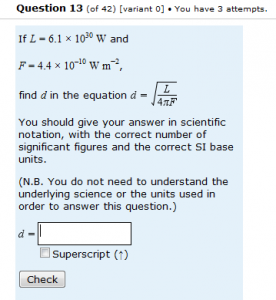

I know I keep banging on about the importance of monitoring your questions when they are ‘out there’, being used by students. If what follows appears to be a bit of a trick (and in a sense it is), it’s a trick with firm foundations – the monitoring of many thousands of student responses.
